The winners in today’s data-driven economy will be the organizations that can quickly, accurately, and consistently glean profitable business insights from their enterprise data warehouse. This means that robust data governance and analysis are among the most valuable capabilities a company can invest in.

Yet data science is often plagued by questionable data integrity. According to Paxata, data scientists spend only 12% of their time on data analysis, as much as 42% of their time on data profiling and preparation, and another 16% on data quality evaluation. Data is growing faster than their ability to manage it, making it increasingly difficult and time-consuming to bring multiple disparate data sources together while maintaining data integrity and quality.

Luckily, there is a relatively simple way to get a handle on your data governance challenges without having to undergo extensive data migrations or invest in new business intelligence (BI) tools: intelligent data virtualization.

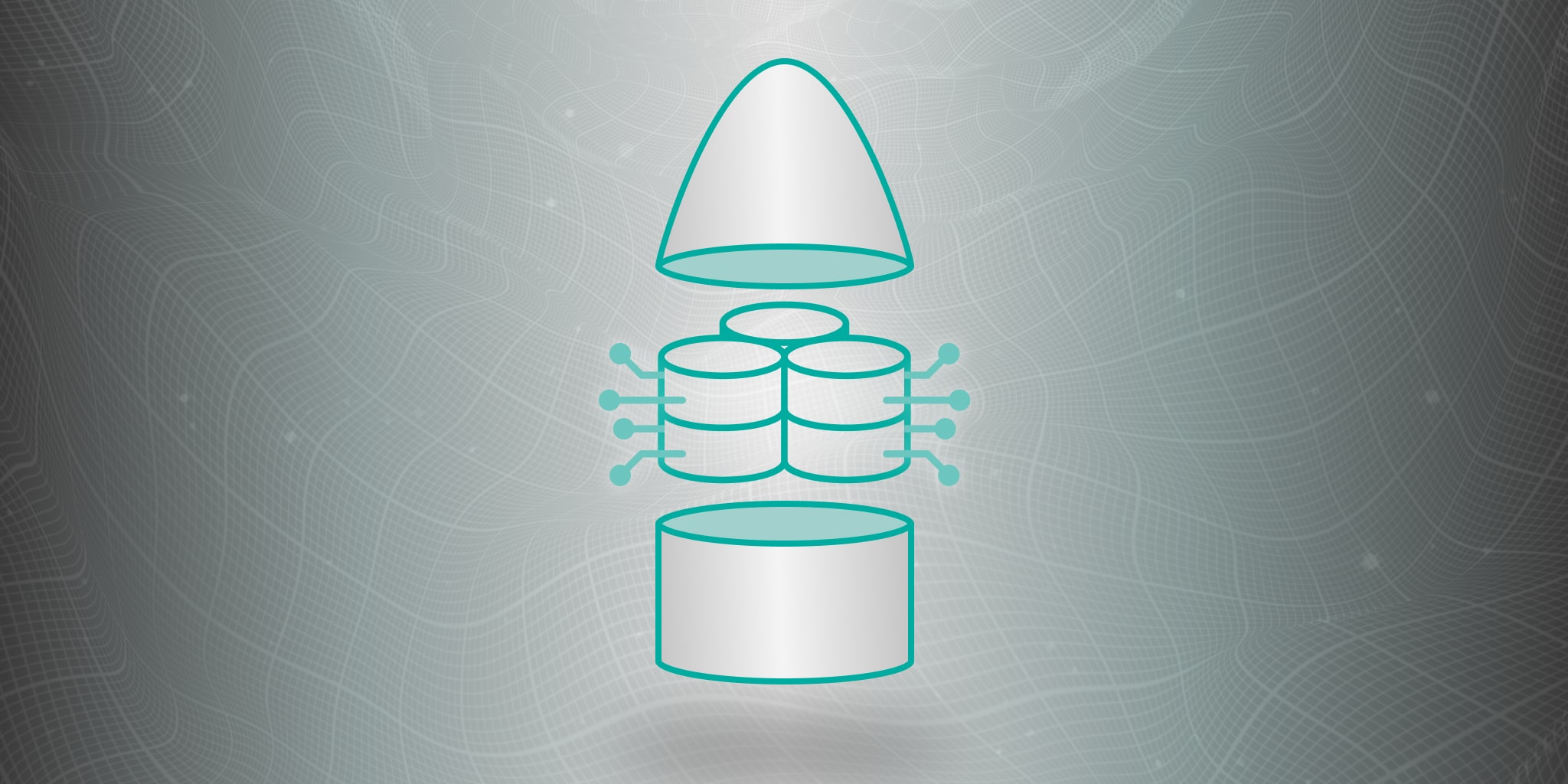

Intelligent data virtualization effectively turns large disparate pools of data into a single, logical representation of information, serving as a catalyst for analyses of all kinds—from Business Intelligence (BI) to Artificial Intelligence (AI) and Machine Learning (ML). This both simplifies and greatly improves data governance in four primary ways:

- Engineers compliance with regulatory requirements

Government regulators are implementing laws to protect the privacy and integrity of data related to citizens. Examples include GDPR and the California Consumer Privacy Act, which goes into effect in 2020. GDPR, for example, contains a requirement called “Privacy by Design,” under which data systems must be engineered to secure data from exposure or leakage by default.

Intelligent data virtualization is the perfect vehicle for implementing Privacy by Design. All of the existing security solutions and policies governing your data remain in place. That means queries are governed to return only data reflecting the privileges the user has with each constituent database. Furthermore, working with virtualized data eliminates the need to create extracts, greatly reducing the chance data will be mishandled.

- Creates a single logical interface within an increasingly distributed world

Intelligent data virtualization establishes a single source of truth (SSOT) in the enterprise data warehouse. With one location that is easily accessible and known to be reliable, users don’t have to create their own local extracts and private data stores. Multiple datasets from different data sources can be easily integrated, facilitating a wider view of company data. Data analysts can draw on a shared understanding of the data and make recommendations with the confidence that they are in alignment with their colleagues.

- Ensures agile, accurate access to data

Intelligent data virtualization applies machine learning to queries to build optimized aggregates that return results many times faster than the original data sources. Queries return in seconds or minutes instead of hours or days, making it not only faster to derive insights, but easier to experiment with new avenues of research.

- Provides consistent results across all analytical tools

Different departments will naturally develop their own preferences in BI tools. The challenge with supporting a variety of BI tools is consistency: different BI tools will generate queries and display data in slightly different ways, generating divergent results even when the underlying data is the same. Intelligent data virtualization enforces consistency by normalizing for the differences in BI tools. When querying a virtualized data store, different BI tools such as Microsoft Power BI and Tableau will return the same answer, thanks to the semantic translation provided by the virtualization system.
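To make the idea of semantic translation concrete, here is a minimal Python sketch. It is not how any particular virtualization product works; the metric name, the alias strings, and the sample rows are all hypothetical. It only illustrates the principle that each tool's own label for a measure is mapped to one shared definition, so every tool gets the same number.

```python
# Hypothetical sample data standing in for a virtualized table.
ROWS = [
    {"region": "East", "amount": 120.0, "returned": False},
    {"region": "East", "amount": 80.0, "returned": True},
    {"region": "West", "amount": 200.0, "returned": False},
]


def net_revenue(rows):
    """Single shared definition: returned orders are excluded."""
    return sum(r["amount"] for r in rows if not r["returned"])


# One registry of metric definitions, owned by the semantic layer.
METRICS = {"net_revenue": net_revenue}

# Assumed labels that two different BI tools might send for the same measure.
ALIASES = {
    "SUM(Net Revenue)": "net_revenue",
    "[Measures].[NetRevenue]": "net_revenue",
}


def answer(tool_label, rows):
    """Translate a tool-specific label to the shared metric, then compute it."""
    metric = METRICS[ALIASES[tool_label]]
    return metric(rows)


if __name__ == "__main__":
    print(answer("SUM(Net Revenue)", ROWS))
    print(answer("[Measures].[NetRevenue]", ROWS))
```

Because both labels resolve to the same function, the business rule (here, excluding returns) lives in one place rather than being re-implemented in each tool.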

Data governance is never static

Ultimately, the point of good data governance is to produce fast, accurate, and consistent insights. This requires continuous adaptation to new compliance, business, and security challenges. Intelligent data virtualization enables organizations to achieve this without breaking the bank, and without complex data transformation or migration methodologies. With intelligent data virtualization, you can quickly and easily empower your data analysts and business users to derive the competitive insights they need to support the business—and not get left behind by your data-driven peers.

We also published a white paper, Big Data & Governance: Leveraging governance to improve the value and accuracy of Big Data, which you can download here.
