Definition

A feature store is a single, central facility where features are stored and organized for the explicit purpose of training models (by data scientists) or making predictions (by applications that have a trained model). In it, you can create or update groups of features derived from multiple data sources, and create or update new datasets from those feature groups, either for training models or for use in applications that do not want to compute the features themselves but simply retrieve them when needed to make predictions.

Features are “any measurable input that can be used in a predictive model”.

As “fuel for AI systems”, features are used to train ML models and make predictions. Predictions typically require large volumes of data or features, and in general, more relevant data tends to yield better predictions.

The data behind analytical models also needs to be organized: the data for the features must be pulled from somewhere (a data source), and the features must be stored after they are computed (feature engineering – transforming the source data into features) so that the ML pipeline can use them.

Machine Learning, in general, requires ready-made datasets of features to train models correctly. When we say datasets, we mean that the features are typically accessed as files in a file system (you can also read features directly as Dataframes from the Feature Store). Feature pipelines are similar to data pipelines, but instead of the output being rows in a table, the output is data that has been aggregated, validated, and transformed into a format that is suitable for input to a model.
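As a rough illustration of the feature pipeline described above, the sketch below validates, aggregates and transforms raw source rows into one feature row per entity. The `transactions` records, the `customer_id` key and the feature names are hypothetical, and plain Python stands in for whatever processing engine a real pipeline would use.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical raw source rows (for example, from an operational database).
transactions = [
    {"customer_id": "c1", "amount": 20.0},
    {"customer_id": "c1", "amount": 40.0},
    {"customer_id": "c2", "amount": 5.0},
    {"customer_id": "c2", "amount": None},  # invalid row, dropped during validation
]


def build_features(rows):
    """Validate, aggregate, and transform raw rows into one feature row per customer."""
    amounts = defaultdict(list)
    for row in rows:
        # Validation step: skip missing or negative amounts.
        if row["amount"] is None or row["amount"] < 0:
            continue
        amounts[row["customer_id"]].append(row["amount"])
    # Aggregation step: one model-ready feature row per entity.
    return {
        cid: {
            "txn_count": len(vals),
            "txn_avg_amount": mean(vals),
            "txn_total_amount": sum(vals),
        }
        for cid, vals in amounts.items()
    }


features = build_features(transactions)
print(features["c1"])
```

The output is keyed by entity rather than by source row, which is the main difference from an ordinary data pipeline that the paragraph above points to.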

Purpose

The purpose of a feature store is to enable data scientists to create advanced analytics models (e.g., predictive, prescriptive, simulation, optimization) with greater speed, scale and efficiency by letting them access, create, use and reuse features.

Key Capabilities of a Feature Store

- A high-throughput batch API for creating point-in-time correct training data and retrieving features for batch predictions, and a low-latency serving API for retrieving features for online predictions

- Consistent feature computations across the batch and serving APIs.

- Consistent Feature Engineering for Training Data and Serving

- Encourage Feature Reuse

- System support for Serving of Features

- Exploratory Data Analysis for Features

- Point-in-Time Correct Training Data (no data leakage)

- Security and Governance of Features

- Reproducibility for Training Dataset
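To make the "point-in-time correct" capability in the list above concrete, here is a minimal sketch under simplifying assumptions: timestamps are plain integers, feature history is kept in memory, and the names `avg_spend_history` and `feature_as_of` are hypothetical. For each labeled event, the lookup uses only the latest feature value recorded at or before the event time, so no future information leaks into the training row.

```python
from bisect import bisect_right

# Hypothetical feature history per entity: (timestamp, value), sorted by timestamp.
avg_spend_history = {
    "c1": [(1, 10.0), (5, 12.0), (9, 30.0)],
}


def feature_as_of(history, entity_id, event_ts):
    """Return the latest feature value recorded at or before event_ts, else None."""
    rows = history.get(entity_id, [])
    timestamps = [ts for ts, _ in rows]
    idx = bisect_right(timestamps, event_ts)
    return rows[idx - 1][1] if idx > 0 else None


# Hypothetical label events: (entity, event timestamp, label).
labels = [("c1", 0, 0), ("c1", 6, 1), ("c1", 9, 0)]

training_rows = [
    {
        "customer_id": cid,
        "event_ts": ts,
        "avg_spend": feature_as_of(avg_spend_history, cid, ts),
        "label": y,
    }
    for cid, ts, y in labels
]

for row in training_rows:
    print(row)
```

The event at timestamp 6 receives the value written at timestamp 5, not the later value from timestamp 9; joining on the latest value instead would be a form of data leakage.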

Key Components of a Feature Store

- An offline feature store for serving large batches of features to (1) create train/test datasets and (2) use the features for training jobs and/or batch application scoring/inference jobs. These requirements are typically served at a latency of more than a minute. The offline store is generally implemented as a distributed file system (ADLS, S3, etc.) or a data warehouse (Redshift, Snowflake, BigQuery, Azure Synapse, etc.). For example, in the Michelangelo Palette Feature Store by Uber, which takes inspiration from lambda architecture, it is implemented using Hive on HDFS.

- An online feature store for serving a single row of features (a feature vector) to be used as input features for an online model for an individual prediction. These requirements are typically served at a latency of seconds or milliseconds. The online store is ideally implemented as a key-value store (e.g., Redis, MongoDB, HBase, Cassandra) for fast lookups, depending on the latency requirement.

- A Feature Registry for storing metadata of all the features with lineage to be used by both offline & online feature stores. It’s basically a utility to help understand all the features available in the store and information about how they were generated. Also, some feature search/recommendation capability for downstream apps/models may be implemented as part of a feature registry to enable easy discovery of features.
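The relationship between the three components above can be sketched with a toy, in-memory model. This is an illustration only: `ToyFeatureStore`, `FeatureMetadata` and all method names are hypothetical, the "offline store" is a plain list and the "online store" a dictionary, and writes are assumed to arrive in timestamp order.

```python
from dataclasses import dataclass, field


@dataclass
class FeatureMetadata:
    name: str
    description: str
    source: str  # lineage: where the feature came from


@dataclass
class ToyFeatureStore:
    registry: dict = field(default_factory=dict)  # feature name -> metadata
    offline: list = field(default_factory=list)   # full history, read in batches
    online: dict = field(default_factory=dict)    # entity key -> latest feature vector

    def register(self, meta):
        self.registry[meta.name] = meta

    def write(self, entity_id, ts, values):
        # Only features known to the registry may be written.
        unknown = set(values) - set(self.registry)
        if unknown:
            raise KeyError(f"unregistered features: {sorted(unknown)}")
        # Offline store keeps every version; online store keeps only the latest.
        self.offline.append({"entity_id": entity_id, "ts": ts, **values})
        self.online.setdefault(entity_id, {}).update(values)

    def get_online_features(self, entity_id):
        """Single-row lookup by key, as an online model would do per prediction."""
        return dict(self.online.get(entity_id, {}))

    def get_offline_features(self):
        """Full history, as a training or batch scoring job would read it."""
        return list(self.offline)


store = ToyFeatureStore()
store.register(FeatureMetadata("txn_count", "Number of transactions", "transactions table"))
store.write("c1", 1, {"txn_count": 2})
store.write("c1", 2, {"txn_count": 3})

print(store.get_online_features("c1"))
print(len(store.get_offline_features()))
```

In a real deployment the two stores would be separate systems of the kinds named in the list above; the sketch only shows that both are fed by the same writes and described by the same registry.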

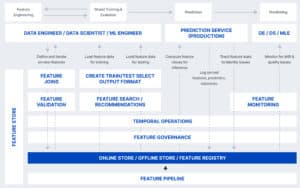

A visual depiction of how a feature store integrates into a technical architecture follows:

Primary Uses of a Feature Store

Feature stores are used to increase an organization's capability to use data to improve understanding, learning, answering business questions, planning, deciding and taking action with greater speed, clarity, confidence and alignment / interpretation.

Feature stores unify the operational data on one side and the analytical data on the other.

Key Business Benefits of Feature Stores

The main benefits of Feature Stores are greater speed, consistency, effectiveness and productivity to develop advanced analytics models, including using machine learning methods.

Another great reason for integrating a feature store into the data technology infrastructure is to ensure that many different personas communicate by speaking the same ‘data language’. Data engineers typically take responsibility for the analytical data and have to get that data to the feature store with the help of data scientists, so they have to build these feature pipelines together with data scientists.

Data scientists can build additional feature pipelines, rewrite features and use the features to train models, while ML engineers are usually responsible for putting these models into production. These personas often work in different programming languages, so the feature store serves as a unified platform that helps them collaborate.

Common Roles and Responsibilities Associated with Feature Stores

Roles important to Feature Stores are as follows:

Data Scientist – Data scientists are responsible for developing advanced analytics models, including using machine learning techniques. Data scientists are the main users of feature stores.

Data Analyst / Business Analyst – Often a business analyst or, more recently, a data analyst is responsible for defining the uses and use cases of the data, as well as providing design input on data structure, particularly the metrics, business questions / queries and outputs (reports and analyses) to be performed and improved. Responsibilities also include owning the roadmap for how data will be enhanced to address additional business questions and existing insight gaps.

Common Business Processes Associated with Feature Stores

The process for developing and deploying Feature Stores is as follows:

- Data Validation

A feature store should support defining data validation rules for checking data sanity. These include, but are not limited to, checking that values are within a valid range, checking that values are unique/not null, and checking that descriptive statistics are within defined ranges. Examples of validation tools that can be integrated into a feature store are Great Expectations (any environment), TensorFlow Data Validation (TFX; deep learning environment) and Deequ (big data environment).
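The kinds of rules listed above (range, not-null, uniqueness) can be sketched in a few lines. This is a hand-rolled illustration with hypothetical names (`validate`, `rows`, the `age` column); it does not use or imitate the API of any of the validation tools mentioned.

```python
def validate(rows, column, min_value, max_value, unique=False):
    """Return a list of human-readable rule violations for one column."""
    errors = []
    values = [row.get(column) for row in rows]

    # Rule 1: values must not be null.
    if any(v is None for v in values):
        errors.append(f"{column}: null values found")

    # Rule 2: non-null values must fall within the valid range.
    present = [v for v in values if v is not None]
    if any(not (min_value <= v <= max_value) for v in present):
        errors.append(f"{column}: values outside [{min_value}, {max_value}]")

    # Rule 3 (optional): non-null values must be unique.
    if unique and len(set(present)) != len(present):
        errors.append(f"{column}: duplicate values found")

    return errors


rows = [{"age": 34}, {"age": 210}, {"age": None}]
problems = validate(rows, "age", min_value=0, max_value=120)
print(problems)
```

A feature pipeline could run such checks before writing to the store and reject or quarantine the batch when the returned list is non-empty.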

- Feature Joins

Features need to be reusable across different train/test datasets for different models, which requires joining them. Joins are mostly implemented in the offline store rather than the online store because they are expensive.
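As a small sketch of what joining feature groups means, the example below inner-joins two hypothetical feature groups on a shared entity key. The group names, the `join_feature_groups` helper and the in-memory dictionaries are assumptions for illustration; an offline store would typically run the equivalent join in SQL or a distributed engine.

```python
# Two hypothetical feature groups keyed by the same entity (customer ID).
profile_features = {"c1": {"age": 34}, "c2": {"age": 51}}
spend_features = {"c1": {"txn_count": 2}, "c3": {"txn_count": 7}}


def join_feature_groups(*groups):
    """Inner-join feature groups on their shared entity keys."""
    shared_keys = set(groups[0]).intersection(*groups[1:])
    joined = {}
    for key in sorted(shared_keys):
        row = {}
        for group in groups:
            row.update(group[key])
        joined[key] = row
    return joined


training_view = join_feature_groups(profile_features, spend_features)
print(training_view)
```

Only `c1` appears in both groups, so it is the only entity in the joined result. The same two groups could be joined with others to build datasets for different models without recomputing any feature.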

- Creation of Train/Test Datasets

Data scientists should be able to use the feature store to query, explore, transform and join features to generate train/test datasets used with a data versioning system.

- Output Data Format

A feature store should provide the option for data scientists to output data in a format suitable for ML modeling (e.g., TFRecord for TensorFlow, NPY for PyTorch). Data warehouses/SQL currently lack this capability. This functionality will save data scientists an additional step in their training pipeline.

- Temporal Operations

Automatic feature versioning across a defined time granularity (every second, hour, day, week, etc.) will let data scientists query features as they were at a given point in time. This will be helpful under the following circumstances:

- Feature Visualization

A feature store should enable out-of-the-box feature data visualization to see data distributions, relationships between features, and aggregate statistics (min, max, avg, unique categories, missing values, etc). This will help the data scientist to get quick insights on the features and make better decisions about using certain features.

- Feature Pipeline

A feature store should have the option to define feature pipelines that have timed triggers to transform and aggregate the input data from different sources before storing them in a feature store. Such feature pipelines (that typically run when new data arrives) can run at a different cadence to training pipelines.

- Feature Monitoring

A feature store should enable monitoring of features to identify feature drift or data drift. Statistics can be computed on live production data and compared with a previous version in the feature store to track training-serving skew. For example, if the average or standard deviation of a feature differs considerably between production/live data and the training data stored in the feature store, it may be prudent to retrain the models.
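A minimal sketch of this comparison follows. The rule used here (flag drift when the live mean moves more than a chosen number of training standard deviations) and the default `threshold` of 0.5 are assumptions picked for illustration, not a recommended standard; the function and variable names are hypothetical.

```python
from statistics import mean, stdev


def drift_report(training_values, live_values, threshold=0.5):
    """Flag drift when the live mean shifts by more than `threshold` training standard deviations."""
    train_mean = mean(training_values)
    train_std = stdev(training_values)
    live_mean = mean(live_values)
    diff = abs(live_mean - train_mean)
    if train_std > 0:
        shift = diff / train_std
    else:
        # Constant training feature: any change at all counts as an unbounded shift.
        shift = 0.0 if diff == 0 else float("inf")
    return {
        "train_mean": train_mean,
        "live_mean": live_mean,
        "shift_in_std": shift,
        "drifted": shift > threshold,
    }


training = [10.0, 12.0, 11.0, 9.0, 13.0]
live = [15.0, 16.0, 14.0, 17.0, 15.0]
report = drift_report(training, live)
print(report["drifted"], round(report["shift_in_std"], 2))
```

A monitoring job could compute such a report per feature on a schedule and raise an alert, or trigger retraining, when `drifted` is true.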

- Feature Search/Recommendation

- A feature store should index all features with their metadata and make it available for easy query retrieval by users (if possible, natural language-based). This will ensure features don’t get lost in a heap and promote their reusability. This is generally done by the Feature Registry (as mentioned in the earlier section).

- Furthermore, feature stores can enhance the visibility of existing features by recommending possible features based on certain attributes and metadata of the features already included in a project. This equips junior and mid-level data scientists with insights that are otherwise generally available only to experienced senior data scientists, and thus enhances the overall efficiency of the data science team.
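Feature search over registry metadata can be sketched as a simple keyword match. The registry entries, field names and the `search_features` helper below are hypothetical; a production registry would more likely use a proper search index, and natural-language search would need more than substring matching.

```python
# Hypothetical registry entries: name, description and tags for each feature.
registry = [
    {"name": "txn_count_30d",
     "description": "Number of transactions in the last 30 days",
     "tags": ["spend", "customer"]},
    {"name": "avg_basket_value",
     "description": "Average basket value per order",
     "tags": ["spend"]},
    {"name": "days_since_signup",
     "description": "Days since the customer signed up",
     "tags": ["customer", "tenure"]},
]


def search_features(entries, query):
    """Return names of features whose metadata contains every query term."""
    terms = query.lower().split()
    results = []
    for entry in entries:
        haystack = " ".join([entry["name"], entry["description"], *entry["tags"]]).lower()
        if all(term in haystack for term in terms):
            results.append(entry["name"])
    return results


matches = search_features(registry, "customer spend")
print(matches)
```

The same metadata could drive recommendations, for example by suggesting features that share tags with those already used in a project.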

- Feature Governance

- Without proper governance, a feature store can quickly become a feature lab or, worse, a feature swamp. The governance strategy impacts the workflows and decision-making of the various teams working with the feature store. Feature store governance includes aspects such as:

- Implementing access control to decide who gets access to work on which features

- Identifying feature ownership, i.e., assigning responsibility for maintaining and updating each feature to a specific person or team

- Limiting the feature type which can be used to train a particular type of model.

- Improving the trust & confidence in feature data by auditing a model outcome to check for bias/ethics

- Maintaining transparency by utilizing feature lineage to track the source of feature, how it was generated, and how it ultimately flows into downstream reporting and analytics within the business

- Understanding the internal policies and regulatory requirements, as well as external compliance requirements, that apply to the feature data and protecting it appropriately
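Two of the governance aspects listed above, access control and feature ownership, can be sketched as metadata attached to each feature. The policy structure, role names, team names and helper functions here are all hypothetical and only show the shape of such a check.

```python
# Hypothetical governance metadata: an owner and the roles allowed to read each feature.
feature_policies = {
    "txn_count_30d": {"owner": "payments-team", "readers": {"data_scientist", "ml_engineer"}},
    "customer_income": {"owner": "risk-team", "readers": {"risk_analyst"}},
}


def can_read(role, feature_name, policies):
    """A role may read a feature only if a policy exists and lists that role."""
    policy = policies.get(feature_name)
    return policy is not None and role in policy["readers"]


def readable_features(role, policies):
    """List the features a given role is allowed to use, in name order."""
    return sorted(name for name in policies if can_read(role, name, policies))


print(readable_features("data_scientist", feature_policies))
print(feature_policies["customer_income"]["owner"])
```

Denying access by default when no policy exists is a deliberate choice in this sketch: a feature without an owner or policy is treated as unusable until someone takes responsibility for it.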

A visual summary of the capabilities that can be used from a feature store is provided below.

A visual depiction of a typical feature store having the 3 major components mentioned above (offline, online, feature registry).

Common Technologies Associated with a Feature Store

Technologies involved with the feature store are as follows:

- Data Products – Data products are self-contained datasets that include all elements of the process required to transform the data into a published set of insights. For a business intelligence use case, the elements are dataset creation, the data model / semantic model and published results, including reports and analyses that may be delivered via spreadsheets or a BI application.

- Data Preparation – Data preparation involves enhancing and aggregating data to make it ready for analysis, including to address a specific set of business questions.

- Data Querying – Technologies called Online Analytical Processing (OLAP) are used to automate data querying, which involves making requests for slices of data from a database. Queries can also be made using standardized languages or protocols such as SQL. Queries take data as an input and deliver a smaller subset of the data in a summarized form for reporting and analysis, including interpretation and presentation by analysts for decision-makers and action-takers.

- Data Catalog – These applications make it easier to record and manage access to data, including at the source and dataset (e.g. data product) level.

- Semantic Layer – Semantic layer applications enable the development of a logical and physical data model for use by OLAP-based business intelligence and analytics applications. The Semantic Layer supports data governance by enabling management of all data used to create reports and analyses, as well as all data generated for those reports and analyses, thus enabling governance of the output / usage aspects of input data.

- Data Governance Tools – These tools automate the management of access to and usage of data. They can also be used to manage compliance by searching across data to determine whether the format and structure of the data being stored comply with policies.

- Business Intelligence (BI) Tools – These tools automate the OLAP queries, making it easier for data analysts and business-oriented users to create reports and analyses without having to involve IT / technical resources.

- Visualization tools – Visualizations are typically available within the BI tools and are also available as standalone applications and as libraries, including open source.

- Automation – Strong emphasis is placed on automating all aspects of the process for developing and delivering integrated datasets from hybrid-cloud environments.

Trends / Outlook of Feature Stores

Key trends for the Feature Store are as follows:

Semantic Layer – The semantic layer is a common, consistent representation of the data used for business intelligence (reporting and analysis) as well as for analytics. It matters because it provides one consistent way to define data in multidimensional form, so that queries made from and across multiple applications, including multiple business intelligence tools, go through one common definition rather than through data models and definitions created within each tool. This promotes consistency and efficiency, including cost savings and the opportunity to improve query speed / performance.

Automation – Vendors are placing increasing emphasis on ease of use and automation to improve the ability to scale data governance management and monitoring. This includes offering “drag and drop” interfaces to execute data-related permissions and usage management.

Observability – Recently, a host of new vendors have begun offering services referred to as “data observability”. Data observability is the practice of monitoring data to understand how it is changing and being consumed. This trend, often called “DataOps”, closely mirrors the “DevOps” trend in software development, which tracks how applications are performing and being used in order to understand, anticipate and address performance gaps and to improve proactively rather than reactively.

AtScale and Feature Stores

AtScale’s semantic layer improves feature implementation by enabling faster feature creation, including rapid data modeling for AI and BI and improved performance via automated query optimization. The semantic layer enables development of a unified, business-driven data model that defines what data can be used, including support for specific queries that generate data for visualization. This makes tracking and auditing easier and ensures that all aspects of how data is defined, queried and rendered across multiple dimensions, entities, attributes and metrics are known and tracked, including the source data and the queries used to develop output for reporting, analysis and analytics.
