The Data Dilemma for AutoML

AutoML platforms have revolutionized data science by simplifying AI/ML model discovery and creation. Leading organizations are racing to get value from their enterprise AI investments and are turning to this new class of technologies to accelerate their initiatives.

Enterprise AI runs on data, and it poses a new set of challenges for data teams already under pressure to satisfy the needs of traditional business intelligence and analytics workloads. Not only do AutoML platforms consume massive amounts of data, they also generate new data that needs to be managed and delivered into the hands of decision makers.

The Solution

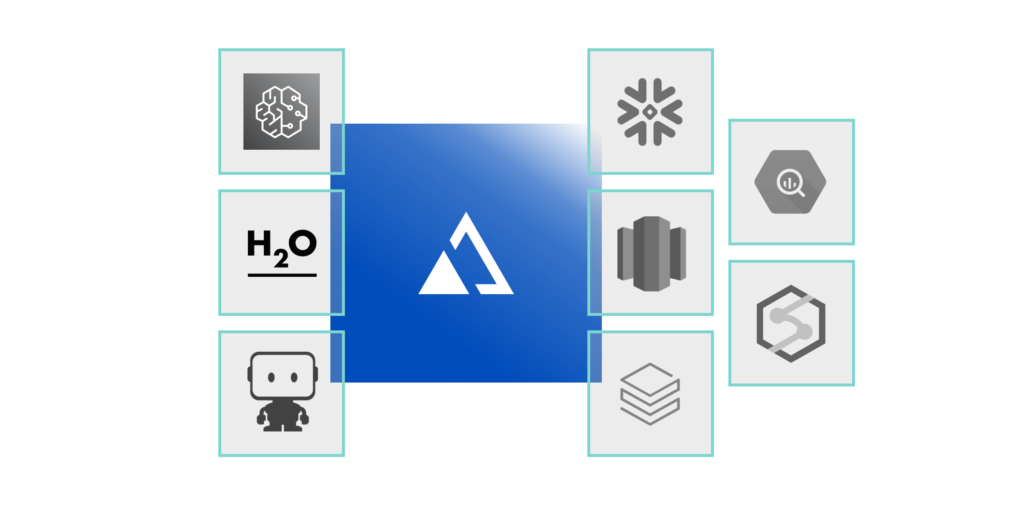

AtScale’s semantic layer platform delivers data to AutoML platforms, simplifying pipelines and supporting feature discovery. Data teams define views of live cloud data that are optimized for model ingestion, including the creation of calculated metrics, time-relative metrics, and custom dimensions. Because these views are virtualized, data movement and ETL are minimized. Furthermore, pipelines are protected from changes to the underlying data.

The semantic layer can also provide a path for publishing model results back to the business for consumption in existing dashboards and reports.

AtScale helps data teams simplify and harden ML data pipelines while providing a path to publish model outputs back to the business.