When AtScale implements support for back-end EDWs, we spend a lot of time (the majority, actually) implementing features that take advantage of the unique capabilities each EDW has to offer. This probably comes from our origins in Hadoop, where every single micro-optimization is required to make interactive querying and business intelligence work. We carry that philosophy forward as we support new and innovative cloud databases.

So while others may have 50+ integrations with data sources, AtScale has about a dozen that work really freaking well. It’s not enough to simply see a table in a data repository; we also want to maximize the data platform’s native capabilities.

So recently, while we were developing support for Snowflake, one of my architects on the project said: “Hey Matt, this autoscaling data warehouse feature in Snowflake lines up perfectly with the right-engine-for-the-job feature we implemented for Hadoop.” In Hadoop, we found that each of the SQL-on-Hadoop engines had different performance characteristics: Hive is exceptional for very large queries but not as great for small ones (until LLAP), Impala and Spark are great for small queries, Impala and Presto have wonderful concurrency characteristics, and so on.

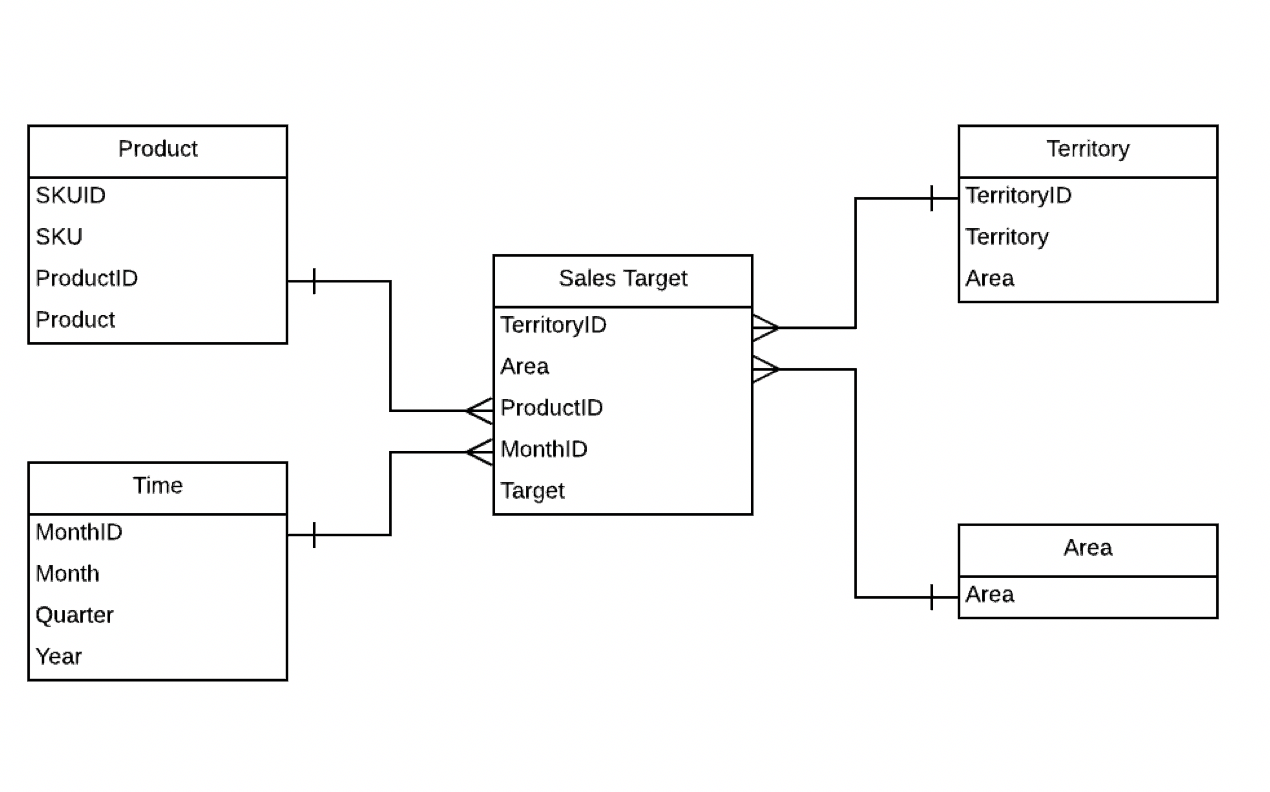

It’s not exactly the same for Snowflake, but here are the dots we connected: we can define multiple Snowflake data warehouses of different sizes (small and large) that point to the same data (wonderful feature, Snowflake devs!). AtScale can be configured to use the large warehouse (more expensive, with coarser-grained scaling) to build aggregations, and the smaller, more granular warehouse(s) to elastically grow and shrink with the less predictable, burstier interactive user queries. We thus get fast summary-table builds and the ability to scale closely in response to user demand. This not only meets aggregate-build and BI performance SLAs; it also gives Snowflake customers more predictability in their spending. The business is happy, and the CFO is happy.
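To make the routing idea concrete, here is a minimal Python sketch, assuming two hypothetical virtual warehouses named `AGG_BUILD_WH` (large) and `BI_INTERACTIVE_WH` (small). It is not AtScale's implementation; it only shows the shape of the decision: aggregate builds go to the big warehouse, interactive queries go to the small one, and both read the same tables. `USE WAREHOUSE` is the standard Snowflake statement for switching a session's warehouse; the `QueryJob` type and the table names are illustrative.

```python
from dataclasses import dataclass

# Hypothetical warehouse names (assumption): a real deployment would use
# whatever virtual warehouses the Snowflake admin has created.
AGGREGATE_BUILD_WAREHOUSE = "AGG_BUILD_WH"   # large, coarse-grained scaling
INTERACTIVE_WAREHOUSE = "BI_INTERACTIVE_WH"  # small, scales with bursty demand


@dataclass
class QueryJob:
    sql: str
    is_aggregate_build: bool  # True for summary-table (aggregate) builds


def choose_warehouse(job: QueryJob) -> str:
    """Pick a warehouse by workload type; both warehouses see the same data."""
    if job.is_aggregate_build:
        return AGGREGATE_BUILD_WAREHOUSE
    return INTERACTIVE_WAREHOUSE


def with_warehouse(job: QueryJob) -> list[str]:
    """Prefix the job with a USE WAREHOUSE statement for its target engine."""
    return [f"USE WAREHOUSE {choose_warehouse(job)}", job.sql]


if __name__ == "__main__":
    # Hypothetical tables: agg_sales_by_month is a summary of sales.
    build = QueryJob(
        "INSERT INTO agg_sales_by_month "
        "SELECT month, SUM(amount) FROM sales GROUP BY month",
        is_aggregate_build=True,
    )
    user_query = QueryJob("SELECT * FROM agg_sales_by_month", is_aggregate_build=False)
    for statement in with_warehouse(build) + with_warehouse(user_query):
        print(statement)
```

Because Snowflake separates storage from compute, switching warehouses changes which compute runs (and is billed for) the query, not which data it sees.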

Once the aggregate builds are done and the large data warehouse has sat idle for its configured timeout, Snowflake auto-suspends it, which keeps costs under control. For interactive queries, AtScale lets you go further and define multiple engines for different BI workloads. These warehouses might auto-scale rather than auto-suspend, but either way you control how much compute each workload gets instead of accepting one size fits all.
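On the Snowflake side, the two kinds of warehouses differ mainly in a few `CREATE WAREHOUSE` properties. The helper below is a sketch that renders those statements; the warehouse names and the particular values (a 60-second suspend on the build warehouse, up to four clusters for interactive work) are assumptions for illustration, not recommendations. `WAREHOUSE_SIZE`, `AUTO_SUSPEND`, `AUTO_RESUME`, `MIN_CLUSTER_COUNT`, `MAX_CLUSTER_COUNT`, and `SCALING_POLICY` are real Snowflake warehouse properties; the multi-cluster ones require Enterprise Edition or higher.

```python
from typing import Optional


def create_warehouse_ddl(
    name: str,
    size: str,
    auto_suspend_seconds: Optional[int],
    min_clusters: int = 1,
    max_clusters: int = 1,
) -> str:
    """Render a Snowflake CREATE WAREHOUSE statement (illustrative sketch).

    auto_suspend_seconds=None omits AUTO_SUSPEND, so Snowflake's default applies.
    """
    if not 1 <= min_clusters <= max_clusters:
        raise ValueError("need 1 <= min_clusters <= max_clusters")
    params = [f"WAREHOUSE_SIZE = '{size}'"]
    if auto_suspend_seconds is not None:
        # Suspend after this many idle seconds so an idle warehouse stops billing.
        params.append(f"AUTO_SUSPEND = {auto_suspend_seconds}")
    params.append("AUTO_RESUME = TRUE")  # wake up automatically on the next query
    if max_clusters > 1:
        # Multi-cluster (scale-out) settings; Enterprise Edition or higher.
        params.append(f"MIN_CLUSTER_COUNT = {min_clusters}")
        params.append(f"MAX_CLUSTER_COUNT = {max_clusters}")
        params.append("SCALING_POLICY = 'STANDARD'")
    return f"CREATE WAREHOUSE IF NOT EXISTS {name} " + " ".join(params) + ";"


if __name__ == "__main__":
    # Hypothetical names and values, chosen only to illustrate the contrast.
    print(create_warehouse_ddl("AGG_BUILD_WH", "LARGE", auto_suspend_seconds=60))
    print(create_warehouse_ddl("BI_INTERACTIVE_WH", "SMALL", None, 1, 4))
```

The build warehouse is big and suspends quickly once aggregates are done; the interactive warehouse stays small per cluster and adds clusters as concurrent users arrive.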

As I said in my prior blog post on cost control, scaling to meet user demand in large units results in over-procurement of resources, and thus wasted dollars.
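The over-procurement point is easy to see with a little arithmetic. The sketch below assumes compute demand can be expressed in credits per hour and split evenly across equal-size clusters, which is a simplification: it ignores per-second billing, the 60-second minimum, and the fact that a bigger warehouse also runs each query faster. The credit rates are Snowflake's published per-hour rates for single-cluster standard warehouses.

```python
import math

# Credits per hour for one cluster of a standard Snowflake warehouse.
CREDITS_PER_HOUR = {"XSMALL": 1, "SMALL": 2, "MEDIUM": 4, "LARGE": 8, "XLARGE": 16}


def provisioned_credits(demand: float, unit_size: str) -> int:
    """Credits/hour you pay for when scaling only in units of `unit_size`.

    Simplifying assumption: demand is divisible across identical clusters,
    so we round up to the next whole cluster.
    """
    unit = CREDITS_PER_HOUR[unit_size]
    clusters = math.ceil(demand / unit)
    return clusters * unit


def wasted_credits(demand: float, unit_size: str) -> float:
    """Credits/hour provisioned beyond what the demand actually needs."""
    return provisioned_credits(demand, unit_size) - demand


if __name__ == "__main__":
    demand = 5  # hypothetical interactive load, in credits/hour
    for size in ("SMALL", "LARGE"):
        print(size, provisioned_credits(demand, size), wasted_credits(demand, size))
```

With a demand of 5 credits per hour, Large units force you to buy 8 (3 wasted), while Small units get you to 6 (1 wasted). The finer the scaling unit, the closer spending tracks demand.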

This is great for the Snowflake Admin, but what about the BI folks?

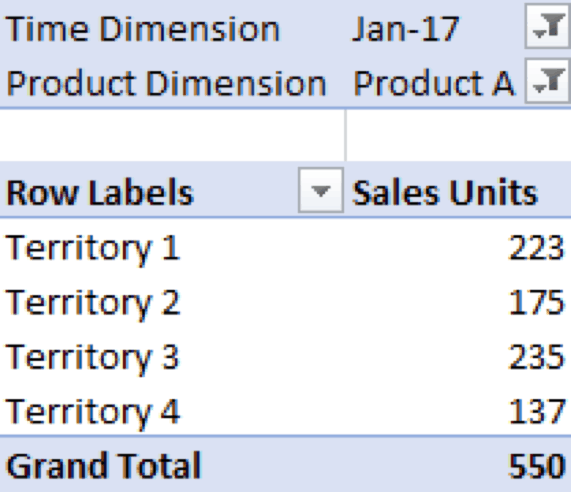

The business intelligence world is wide. It includes everything from canned reports to machine learning algorithms. The BI tools that developed along the way chose to support either SQL or MDX (some tools can use both). For SQL-based tools, the query language itself assumes the need to stitch data together (JOINs, UNIONs, etc.) and ultimately gives the information consumer a series of tables with many columns. MDX-based tools are more concerned with data consumption: the way you present data (hierarchies, attributes, measures, categories, etc.) is not just a view of the data; it also dictates how you interact with it. Both approaches have their merits, and both have democratized data. Databases like Snowflake understand SQL, but not MDX. So what do you do if your user community wants to use an Excel pivot table, Power BI with drill-downs, or other popular MDX tools against Snowflake?
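Here is a toy illustration of that gap. When a pivot-table user expands a year to see its quarters, something has to turn that click into SQL that Snowflake understands. The sketch assumes a single flat table named `sales` and a hypothetical `year > quarter > month` hierarchy; it is not AtScale's query engine, which also has to handle joins, security, and choosing aggregate tables.

```python
# Hypothetical hierarchy (assumption): each drill-down level adds one GROUP BY column.
TIME_HIERARCHY = ["year", "quarter", "month"]


def drilldown_sql(table: str, hierarchy: list[str], depth: int, measure: str) -> str:
    """Render SQL for `measure` viewed at `depth` levels of `hierarchy`.

    depth=1 -> by year; depth=2 -> by year and quarter; and so on.
    """
    if not 1 <= depth <= len(hierarchy):
        raise ValueError("depth out of range for hierarchy")
    cols = ", ".join(hierarchy[:depth])
    return (
        f"SELECT {cols}, SUM({measure}) AS total_{measure} "
        f"FROM {table} GROUP BY {cols} ORDER BY {cols}"
    )


if __name__ == "__main__":
    # Simulate a user drilling from years down to months in a pivot table.
    for level in range(1, len(TIME_HIERARCHY) + 1):
        print(drilldown_sql("sales", TIME_HIERARCHY, level, "amount"))
```

In an MDX tool the hierarchy is part of the model the user clicks through; in SQL it only exists as whichever columns happen to appear in the GROUP BY.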

{Stage Left: Enter AtScale}

The delivery of data is critical to the adoption of BI assets, of course, but it also paves the way for the adoption of modern data platforms. That delivery has to carry business logic, has to be secure, has to serve all BI users, and has to be fast.

Snowflake also introduced new ways to handle semi-structured data, such as JSON arrays, through its VARIANT data type. AtScale lets you take advantage of this by passing Snowflake SQL extensions through when preparing tables in AtScale.
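For a sense of what those extensions look like: in Snowflake SQL, a query such as `SELECT f.index, f.value:sku::STRING AS sku FROM orders o, LATERAL FLATTEN(input => o.payload:items) f` turns each element of a JSON array stored in a VARIANT column into its own row (`orders` and `payload` are hypothetical names). The Python below only mimics that row-per-element behavior on a single JSON document so the semantics are visible; it is not how Snowflake implements `FLATTEN`, and it returns only the `INDEX` and `VALUE` columns.

```python
import json
from typing import Any


def flatten(variant_json: str, path: list[str]) -> list[dict[str, Any]]:
    """Mimic FLATTEN on one VARIANT value: one row per element of the array
    found by following `path` (a list of object keys) into the document."""
    node: Any = json.loads(variant_json)
    for key in path:
        node = node[key]  # walk down, like payload:items in Snowflake SQL
    if not isinstance(node, list):
        raise TypeError("path does not point to an array")
    # Each array element becomes its own row, tagged with its position.
    return [{"INDEX": i, "VALUE": value} for i, value in enumerate(node)]


if __name__ == "__main__":
    # Hypothetical order document with a nested array of line items.
    order = '{"order_id": 7, "items": [{"sku": "A1", "qty": 2}, {"sku": "B2", "qty": 1}]}'
    for row in flatten(order, ["items"]):
        print(row["INDEX"], row["VALUE"]["sku"])
```

Once nested arrays are unnested into rows like this, they can be joined and aggregated like any other table, which is what makes them usable in a BI model.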

It’s a marriage made in heaven (or in the cloud). When you decide to move information assets to the cloud with Snowflake, choose AtScale to deliver that data to your consumers. That way, the move to the cloud is innovative, secure, and quickly adopted.
