September 10, 2019

5 Essential Tips for Successful Cloud Migration (and how to avoid the “Gotchas”)

To be successful, data-driven organizations must analyze large data sets. This task requires computing capacity and resources that vary with the kind of analysis and the amount of data being generated and consumed. Storing large data sets carries high installation and operational costs. Cloud computing, with its pay-per-use pricing model and ability to scale on demand, is well suited to big data workloads because it delivers elastic compute and storage capacity.

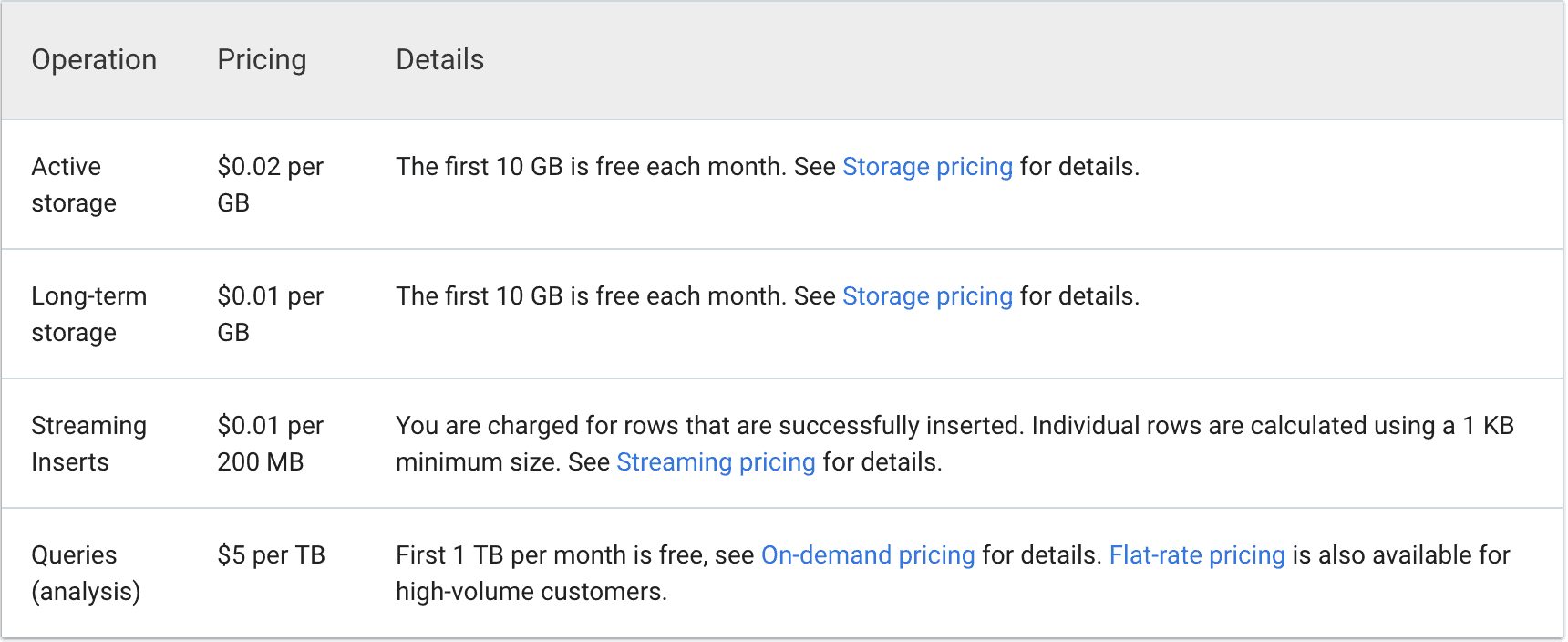

Google BigQuery (GBQ) supports analytics over petabyte-scale data on a serverless enterprise data warehouse architecture, which allows BI users to address the most common data challenges cost-effectively by reducing reliance on large onsite infrastructure investments. GBQ’s pay-as-you-go model lets users pay only for the services they use, with no upfront costs or termination fees. Pricing is based on three factors:

- Storage: $0.01 to $0.02 per GB per month, depending on whether the data is in “long-term storage” or “active storage.”

- Streaming inserts: $0.01 per 200 MB

- Querying data: $5 per TB

Although the pay-as-you-go model means Google BigQuery users can consume big data services without incurring large capital expenses, costs can increase rapidly as the amount of data grows. As a result, delivering interactive performance for BI users while keeping costs low is a major challenge when developing self-service big data analytics in the cloud.
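To make the pricing model concrete, here is a minimal sketch that estimates a monthly bill from the three factors listed above. The function name and the usage figures are hypothetical. The sketch assumes active storage is billed at the higher storage rate and long-term storage at the lower one, assumes streaming inserts are billed per 200 MB (Google's published on-demand unit at the time), and ignores free tiers and minimum billing increments.

```python
# Illustrative on-demand rates in USD, based on the list above.
ACTIVE_STORAGE_PER_GB_MONTH = 0.02     # assumption: active storage is the higher rate
LONG_TERM_STORAGE_PER_GB_MONTH = 0.01  # assumption: long-term storage is the lower rate
STREAMING_PER_200_MB = 0.01            # assumption: billed per 200 MB streamed
QUERY_PER_TB = 5.00


def estimate_monthly_cost(active_gb, long_term_gb, streamed_mb, queried_tb):
    """Return a rough monthly bill in USD for the given usage (hypothetical helper)."""
    storage = (active_gb * ACTIVE_STORAGE_PER_GB_MONTH
               + long_term_gb * LONG_TERM_STORAGE_PER_GB_MONTH)
    streaming = (streamed_mb / 200) * STREAMING_PER_200_MB
    querying = queried_tb * QUERY_PER_TB
    return round(storage + streaming + querying, 2)


if __name__ == "__main__":
    # Hold storage fixed and grow the volume of data scanned by queries.
    for queried_tb in (1, 10, 100):
        cost = estimate_monthly_cost(1000, 0, 0, queried_tb)
        print(f"{queried_tb:>4} TB queried per month -> ${cost:,.2f}")
```

With storage held fixed, the query term dominates the bill as the volume of scanned data grows, which is the cost pressure described above.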

AtScale solves this challenge by creating, managing, and optimizing aggregate tables through machine learning. These aggregate tables contain measures from one or more fact tables and include aggregated values for these measures. The aggregation of the data is at the level of one or more dimensional attributes, or, if no dimensional attributes are included, the aggregated data is a total of the values for the included measures.
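The structure of such an aggregate can be sketched in a few lines of plain Python. This is only an illustration of the idea, not AtScale's implementation: the fact rows, column names, and the `build_aggregate` helper are all hypothetical.

```python
from collections import defaultdict

# Hypothetical fact rows: (region, product, sales_amount, units)
fact_rows = [
    ("East", "Widget", 100.0, 2),
    ("East", "Widget", 50.0, 1),
    ("East", "Gadget", 75.0, 3),
    ("West", "Widget", 20.0, 1),
]

# Column positions of the dimensional attributes and measures in each row.
DIMENSIONS = {"region": 0, "product": 1}
MEASURES = {"sales_amount": 2, "units": 3}


def build_aggregate(rows, group_by):
    """Sum every measure at the level of the given dimensional attributes.

    An empty group_by yields a single row holding the grand totals.
    """
    totals = defaultdict(lambda: [0.0] * len(MEASURES))
    for row in rows:
        key = tuple(row[DIMENSIONS[d]] for d in group_by)
        for i, col in enumerate(MEASURES.values()):
            totals[key][i] += row[col]
    return dict(totals)


if __name__ == "__main__":
    print(build_aggregate(fact_rows, ["region"]))  # one row per region
    print(build_aggregate(fact_rows, []))          # a single grand-total row
```

Grouping by `region` collapses four fact rows into two aggregate rows, and grouping by nothing collapses them into one. A query that only needs totals by region can read the smaller table instead of the raw facts.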

AtScale’s aggregates reduce the number of rows that a query has to scan to obtain the results needed for a report or dashboard. Scanning fewer rows shortens the time needed to produce results, which reduces latency and significantly lowers cloud resource consumption, translating into cost savings.

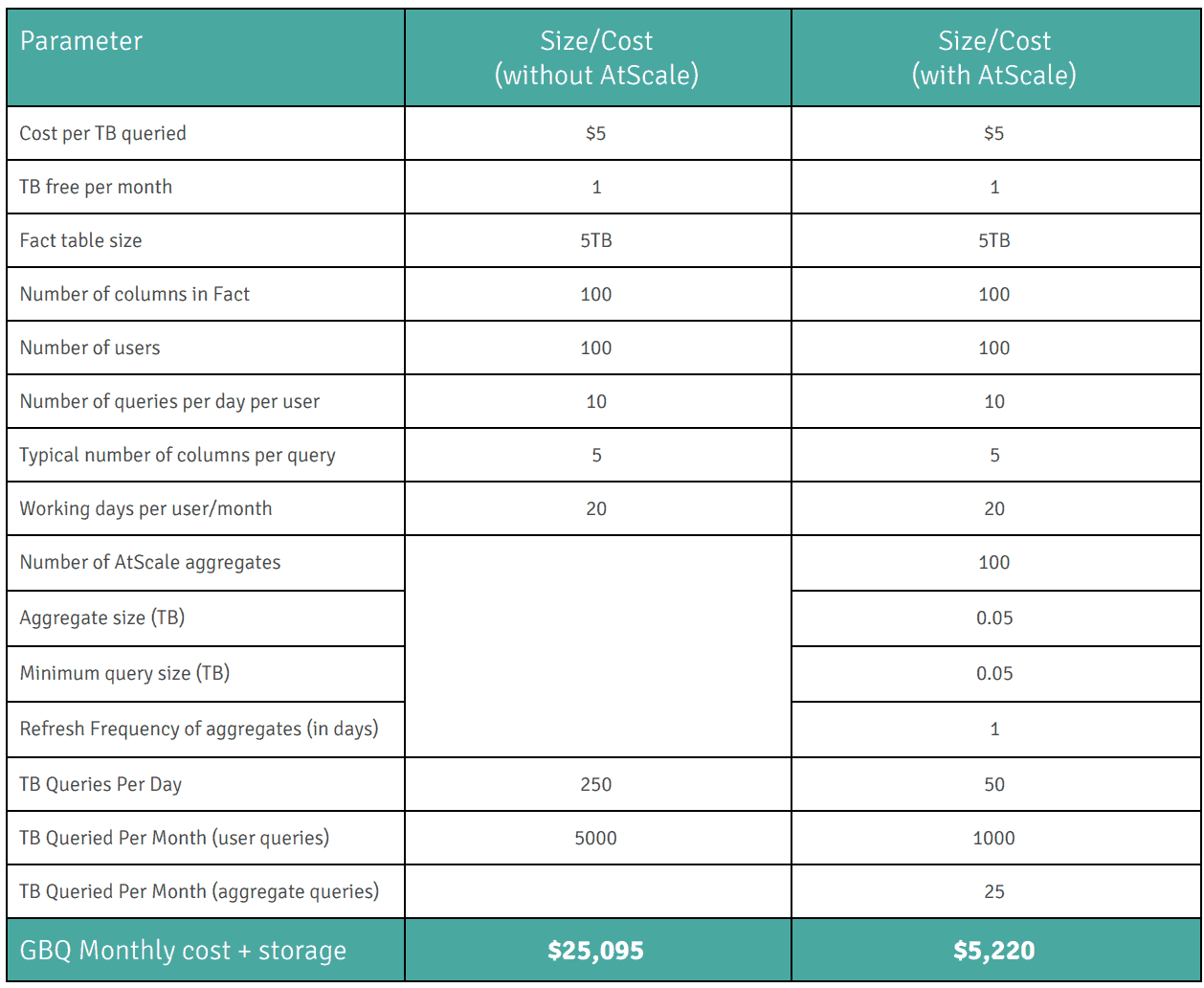

AtScale uses the aggregates stored on Google BigQuery so that subsequent retrievals are very fast and scan only a small footprint of data. Once the aggregate table has been built, subsequent access to raw data tables is avoided and the Query Data Usage shrinks. To follow up on the cost analysis shown earlier, the following calculations demonstrate how AtScale helps reduce the cost of data processing on GBQ. To provide a fair comparison, extra parameters are included in the calculation.

Implementing AtScale with Google BigQuery results in considerable savings, because BI queries no longer read data directly from raw fact tables. Instead, queries are executed against AtScale aggregate tables, which return the same results at a fraction of the cost.
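The arithmetic behind that claim can be sketched as follows. All workload figures here are hypothetical and are not benchmark results. The sketch assumes the $5 per TB on-demand query rate quoted earlier, assumes each rebuild of the aggregate scans the raw table once, and ignores storage costs, free tiers, and minimum billing increments.

```python
QUERY_PRICE_PER_TB = 5.00  # on-demand query rate quoted earlier (USD)


def query_cost(tb_scanned_per_query, queries_per_month):
    """Monthly cost in USD of running the same scan a number of times."""
    return tb_scanned_per_query * queries_per_month * QUERY_PRICE_PER_TB


def monthly_savings(raw_tb, aggregate_tb, queries_per_month, rebuilds_per_month):
    """Savings from answering queries from an aggregate instead of raw data.

    Assumption: each rebuild of the aggregate scans the raw table once.
    """
    direct = query_cost(raw_tb, queries_per_month)
    with_aggregate = (query_cost(raw_tb, rebuilds_per_month)
                      + query_cost(aggregate_tb, queries_per_month))
    return direct - with_aggregate


if __name__ == "__main__":
    # Hypothetical workload: a 1 TB fact table, a 1 GB aggregate,
    # 1,000 dashboard queries a month, and a daily aggregate rebuild.
    saved = monthly_savings(raw_tb=1.0, aggregate_tb=0.001,
                            queries_per_month=1000, rebuilds_per_month=30)
    print(f"Estimated monthly savings: ${saved:,.2f}")
```

The savings depend on how often the aggregate is rebuilt relative to how often it is queried. If rebuilds are as frequent as queries, the benefit disappears.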
