March 6, 2019

TECH TALK: AtScale 6.0 brings Universal Semantic Layer Benefits to Google Cloud

The average data platform holds raw data assets from several different locations: BI apps, data warehouses, reports, and more. This becomes a challenge when teams need to unite this entire ecosystem and use it for intelligent decisions and business growth. Without some way to connect these silos, an organization cannot get a complete picture of its data.

The “modern data stack” addresses this challenge by introducing technology and processes that connect cloud-managed data to the users who need it. Within this modern data stack, semantic layers have emerged as a way to translate raw data into business-ready analytics. By semantic layer, we mean a common ground for defining, discussing, and interpreting data.

While some types of semantic layers naturally form as humans interact with data, others are deployed solutions that consolidate specific data views. These semantic layer offerings aim to make it easier for all personnel — both data experts and non-experts — to leverage data. But some types of semantic layers work far better than others.

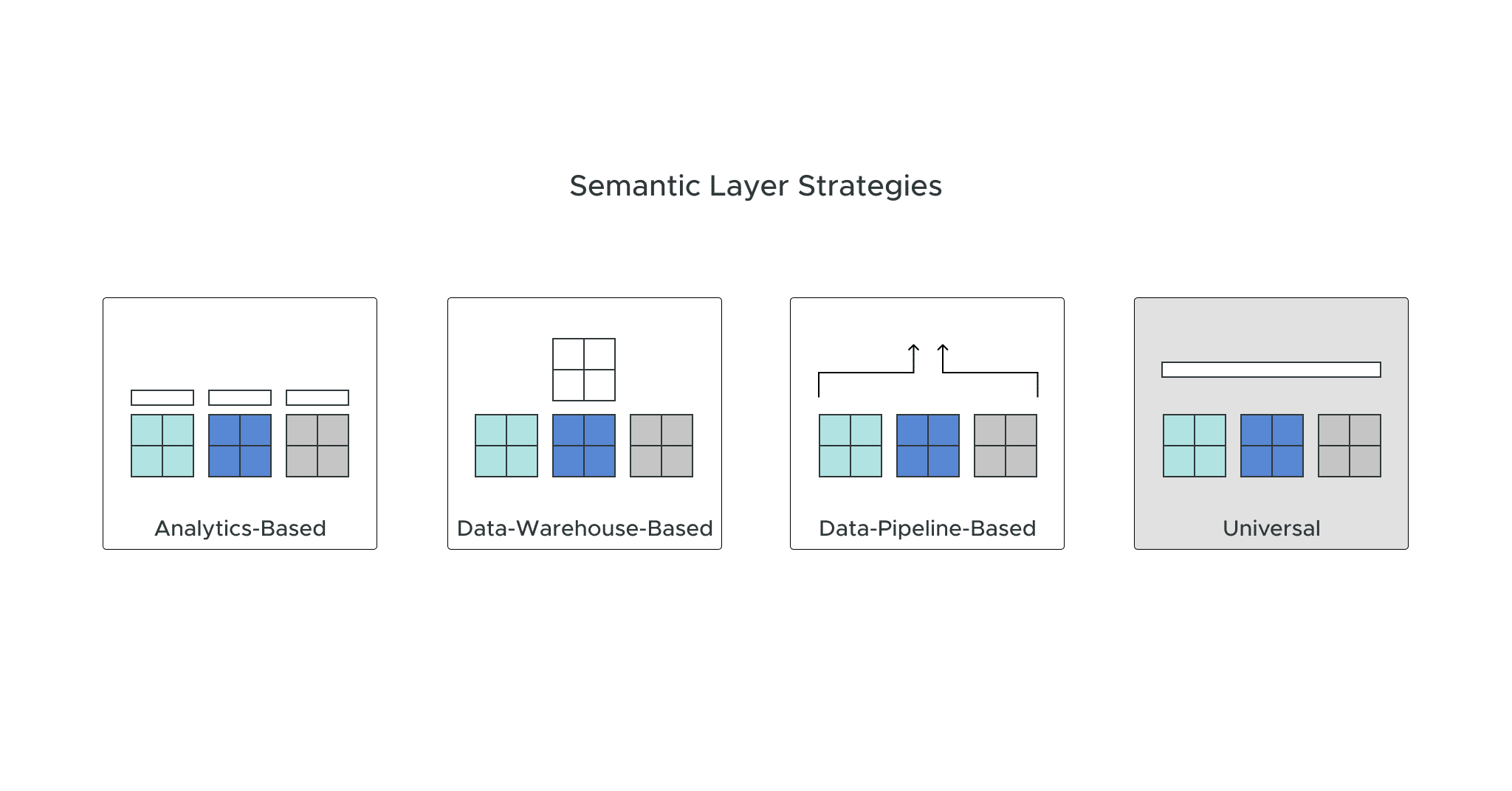

In this blog post, we’ll take a look at four types of semantic layers:

- Analytics-based

- Data-warehouse-based

- Data-pipeline-based

- Universal

Analytics-Based Semantic Layers

Separate Semantic Layers for Each Analytics Tool

As analytics tools like dashboards, reports, ad hoc spreadsheets, and embedded visualizations emerged, each needed a consistent way to present data. So, each analytics tool introduced its own semantic layer: a standardized way to define and discuss key data concepts within the tool. These tool-specific semantic layers help teams start discussing data in practical, actionable terms.

Which Orgs Should Rely on an Analytics-Based Semantic Layer?

Analytics-based semantic layers work well for companies using only one or two different analytics tools. This situation would be the exception rather than the rule, as the average modern organization uses several analytics tools: BI tools (e.g., Power BI or Tableau) for managing dashboards, a spreadsheet like Excel for financial analysis, Jupyter notebooks for data science functions, and more.

Challenges of an Analytics-Based Semantic Layer

If an organization uses more than one or two analytics tools, using a separate semantic layer within every analytics tool leads to issues. Each tool uses different definitions and contexts, causing significant discrepancies across the organization (a phenomenon called semantic sprawl).

This semantic sprawl leaves teams siloed and isolated from each other. For example, the finance and data science teams might each define the same business metric differently in their preferred analytics tools. If the organization then needed to compile information about that metric from across the company, the task would be difficult, if not impossible. This variance in definition and context ultimately causes confusion and slows data-driven innovation.
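To make the sprawl concrete, here is a minimal Python sketch. The order records, the "revenue" metric, and both team definitions are hypothetical and chosen only for illustration. Two teams compute a number with the same name from the same data and arrive at different answers.

```python
# Hypothetical order records that both teams read from the same source.
orders = [
    {"id": 1, "amount": 120.0, "tax": 20.0, "refunded": False},
    {"id": 2, "amount": 60.0, "tax": 10.0, "refunded": True},
    {"id": 3, "amount": 240.0, "tax": 40.0, "refunded": False},
]


def finance_revenue(rows):
    """Assumed finance definition: skip refunded orders and strip tax."""
    return sum(r["amount"] - r["tax"] for r in rows if not r["refunded"])


def data_science_revenue(rows):
    """Assumed data science definition: gross amount of every order."""
    return sum(r["amount"] for r in rows)


finance_total = finance_revenue(orders)
ds_total = data_science_revenue(orders)

# Same metric name, same data, two different numbers.
print(f"Finance 'revenue':      {finance_total}")
print(f"Data science 'revenue': {ds_total}")
print(f"Discrepancy:            {ds_total - finance_total}")
```

Neither definition is wrong on its own. The problem is that nothing outside the two tools records which one the organization means by "revenue".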

Data-Warehouse-Based Semantic Layers

Data Marts for Centralized, Business-Oriented Views of Data

Data warehouses generally store raw data, so teams need added functionality to find that data and put it to practical use. To enable actionable analytics, these warehouses provide data marts, a type of semantic layer. Data marts turn raw data into “business-ready” analytics by creating centralized, business-oriented views of the data.
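As a rough illustration, a data mart can be approximated by a SQL view over a raw table. The sketch below uses Python's built-in `sqlite3` module as a stand-in for a warehouse. The table, columns, and business rule (only completed orders count as sales) are assumptions for the example, and real data marts are often materialized tables rather than plain views.

```python
import sqlite3

# An in-memory SQLite database stands in for the warehouse.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    -- Raw table: amounts in cents, including cancelled orders.
    CREATE TABLE raw_sales (
        order_id INTEGER,
        region TEXT,
        amount_cents INTEGER,
        status TEXT
    );
    INSERT INTO raw_sales VALUES
        (1, 'EMEA', 12000, 'complete'),
        (2, 'EMEA',  6000, 'cancelled'),
        (3, 'APAC', 24000, 'complete');

    -- Business-oriented view: net sales per region in currency units,
    -- counting completed orders only (an assumed business rule).
    CREATE VIEW sales_mart AS
    SELECT region, SUM(amount_cents) / 100.0 AS net_sales
    FROM raw_sales
    WHERE status = 'complete'
    GROUP BY region;
    """
)

# Consumers query the view instead of re-deriving the rule themselves.
mart_rows = conn.execute(
    "SELECT region, net_sales FROM sales_mart ORDER BY region"
).fetchall()
print(mart_rows)
```

Every tool that reads `sales_mart` inherits the same definition of net sales, which is the appeal of the approach. The cost is that any change to the definition has to go through whoever owns the warehouse.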

Which Orgs Should Rely on a Data-Warehouse-Based Semantic Layer?

Theoretically, data marts could be a business’s main semantic layer in the following situations:

- The org has several analytics consumption tools. In this case, multiple BI tools or AI/ML platforms produce competing versions of business metrics. Because of this, business users must model data in their analytics tools before they can build dashboards, which requires deep knowledge of SQL and data warehouses. We can also assume that a business in this situation isn’t actively working toward a self-service analytics model.

- The org relies on a central IT or data team to create data products. They are not working towards a data mesh or hub-and-spoke approach. Instead, a centralized team owns most data product creation activities.

- The org has large data volumes (more than 20 million rows), and data latency and completeness are priorities. In this case, the org realizes that data extracts or imports can’t scale to handle the complexity or size of its data. It also realizes that updating extracts and data silos is too costly, time-consuming, and risky, as it requires access to unaggregated data. This org likely also prioritizes data freshness (low latency) over speed/agility.

- The org distrusts analytics. Because of this, the org needs a single source of truth to represent business metrics consistently, regardless of the data source or consumption tool. They must leverage centralized semantic models built directly into their warehouses to foster trust in analytics.

Challenges of a Data-Warehouse-Based Semantic Layer

If a business doesn’t fall under any of those four categories and wants to facilitate self-service analytics instead, a data-warehouse-based semantic layer isn’t a good fit. The structure of a data-warehouse-based semantic layer means that definitions become outdated quickly and can’t keep up with the domain-specific needs of different workgroups. In addition, cloud-scale tables are massive, making it impossible to find a query engine that will keep up with this scale at an actionable pace.

In an organization that prioritizes self-service analytics, users often get frustrated with these slowdowns. To shortcut the process and get faster results, they extract data into analytics platforms and manipulate it there instead. This leads back to the same challenge: semantic sprawl.

Data-Pipeline-Based Semantic Layers

Transformation Within the Pipeline to Create Business Definitions

Some businesses leverage a transformation phase within their data pipeline to create better data definitions and support analytics customers. Data engineers enable this phase by encoding semantic meaning inside the pipelines.
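A minimal sketch of what "encoding semantic meaning inside the pipeline" can look like follows. The field names and business rules (net revenue as gross minus refunds, an "active" customer as one who ordered in the last 90 days) are hypothetical and stand in for whatever definitions a real data engineering team would agree on.

```python
from datetime import date

ACTIVE_WINDOW_DAYS = 90  # assumed business rule, not from any standard


def transform(raw_rows, today):
    """Transformation step: turn raw fields into business-defined fields."""
    curated = []
    for row in raw_rows:
        days_since_order = (today - row["last_order"]).days
        curated.append(
            {
                "customer_id": row["cust"],
                # Business definition baked into the pipeline code.
                "net_revenue": row["gross"] - row["refunds"],
                "is_active_customer": days_since_order <= ACTIVE_WINDOW_DAYS,
            }
        )
    return curated


# Hypothetical raw rows as they might arrive from a source system.
raw = [
    {"cust": "c1", "gross": 500.0, "refunds": 50.0, "last_order": date(2024, 1, 10)},
    {"cust": "c2", "gross": 80.0, "refunds": 0.0, "last_order": date(2023, 6, 1)},
]

curated = transform(raw, today=date(2024, 2, 1))
for record in curated:
    print(record)
```

The definitions live in pipeline code, so downstream dashboards only ever see the curated fields. Any second pipeline that needs "net revenue" has to repeat the same logic.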

Which Orgs Should Enable a Data-Pipeline-Based Semantic Layer?

Some orgs could benefit from a data-pipeline-based semantic layer. It works best if the data engineering team is small and centrally organized and if the data consumers primarily use canned dashboards. In addition, the org’s data must:

- Be relatively static: its shape and update frequency don’t change much for a given team.

- Either have a low degree of dimensionality (not many unique data elements) or have dimensionality that rarely changes.

- Be small. More specifically, data extracts must be feasible without compromising data recency SLAs.

Challenges of a Data-Pipeline-Based Semantic Layer

While a data pipeline approach might work better than just creating definitions inside an analytics tool or data warehouse, it won’t work for some organizations.

Encoding semantic meaning into every pipeline is a very involved process, and it becomes especially cumbersome in a fast-moving cloud ecosystem. Data engineers must recreate common business concepts whenever they design a new pipeline. Over time, these pipelines drift out of sync with each other, again leading to semantic sprawl!

Universal Semantic Layers

A Standalone Layer for Facilitating a Common Language Across the Org

A universal semantic layer is a fourth approach to creating a “common data language” for all business users to leverage. Rather than embedding the semantic layer into pre-existing processes or tools, this approach adds a new layer between the data platform and the analysis and output services. This thin, logical layer plays one role in the data transformation process: taking raw data assets from multiple sources and turning them into business-oriented metrics and analysis frameworks.
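The idea of a standalone layer can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not AtScale's implementation or API: the `Metric` class, the `METRICS` registry, and `compile_query` are hypothetical names, and `sqlite3` stands in for the data platform. A metric is defined once, and every consumer gets its query generated from that single definition.

```python
import sqlite3
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    """Hypothetical central definition of one business metric."""
    name: str
    expression: str  # SQL aggregate expression
    source: str      # table the metric is computed from
    row_filter: str = ""


# The single place where "net_revenue" is defined.
METRICS = {
    "net_revenue": Metric("net_revenue", "SUM(amount - tax)", "orders", "refunded = 0"),
}


def compile_query(metric_name: str, dimension: str) -> str:
    """Render SQL for a metric sliced by one dimension.

    String formatting is used for brevity; inputs are trusted constants here.
    """
    m = METRICS[metric_name]
    where = f" WHERE {m.row_filter}" if m.row_filter else ""
    return (
        f"SELECT {dimension}, {m.expression} AS {m.name} "
        f"FROM {m.source}{where} GROUP BY {dimension} ORDER BY {dimension}"
    )


conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE orders (region TEXT, channel TEXT, amount REAL, tax REAL, refunded INTEGER);
    INSERT INTO orders VALUES
        ('EMEA', 'web',   120, 20, 0),
        ('EMEA', 'store',  60, 10, 1),
        ('APAC', 'web',   240, 40, 0);
    """
)

# Two different consumers (say, a dashboard and a notebook) slice the
# same metric by different dimensions but share one definition.
by_region = conn.execute(compile_query("net_revenue", "region")).fetchall()
by_channel = conn.execute(compile_query("net_revenue", "channel")).fetchall()
print(by_region)
print(by_channel)
```

Because both queries are generated from the same definition, the regional and channel breakdowns add up to the same total. Changing the definition in `METRICS` changes it for every consumer at once.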

How a Universal Semantic Layer Differs from Other Approaches

Unlike other semantic layers, a universal layer enables users to work within their preferred analytics tools without sacrificing consistency or speed. It’s an interoperable approach for multiple systems and teams that work with the data and need to communicate with each other.

By adding this extra layer to a data stack, users see benefits like:

- A single source of truth for enterprise metrics and hierarchical dimensions, accessible from any analytics tool.

- Agility for quickly updating metrics or defining new ones, designing domain-specific views of data, and incorporating new raw data assets.

- Optimized analytics performance with cloud resource efficiency.

- Easily enforceable governance policies related to access control, definitions, performance, and resource consumption.

Discover More About Semantic Layers

AtScale’s universal semantic layer platform helps organizations establish a culture of data literacy by making data speak the language of business. We provide centralized language for the decentralized creation and use of data products, helping organizations fully democratize data.

Unlike other approaches, which are specific to certain tools or processes, AtScale’s universal semantic layer takes data from all tools, processes, and business units into consideration, giving every dimension and measure the same definition across the board. Ultimately, we help modern organizations thrive in today’s data-centric world.

Still curious about the technology behind semantic layers? Download our whitepaper, Why Semantics Matter in the Modern Data Stack, to delve into these four types of semantic layers, the implementation process of a universal semantic layer, and more!
