March 4, 2019

Two Ways That AtScale Delivers Faster Time To Insight: Dynamic BI Modeling and Improving Query Concurrency

AtScale recently announced Python-based Metrics Engineering as a new capability supported within AI-Link. AI-Link is an extension of the AtScale semantic layer platform that provides a Python-based API for connecting data science notebooks and AI/ML platforms to AtScale. AI-Link now lets users programmatically create, update, and delete metrics within the semantic layer using Python. This capability, which we refer to as Metrics Engineering, can greatly simplify the management of large metrics stores. Python-based Metrics Engineering is aimed at analytics engineers, data scientists, and other “code-first” teams.

The Challenge of Managing Metrics Stores

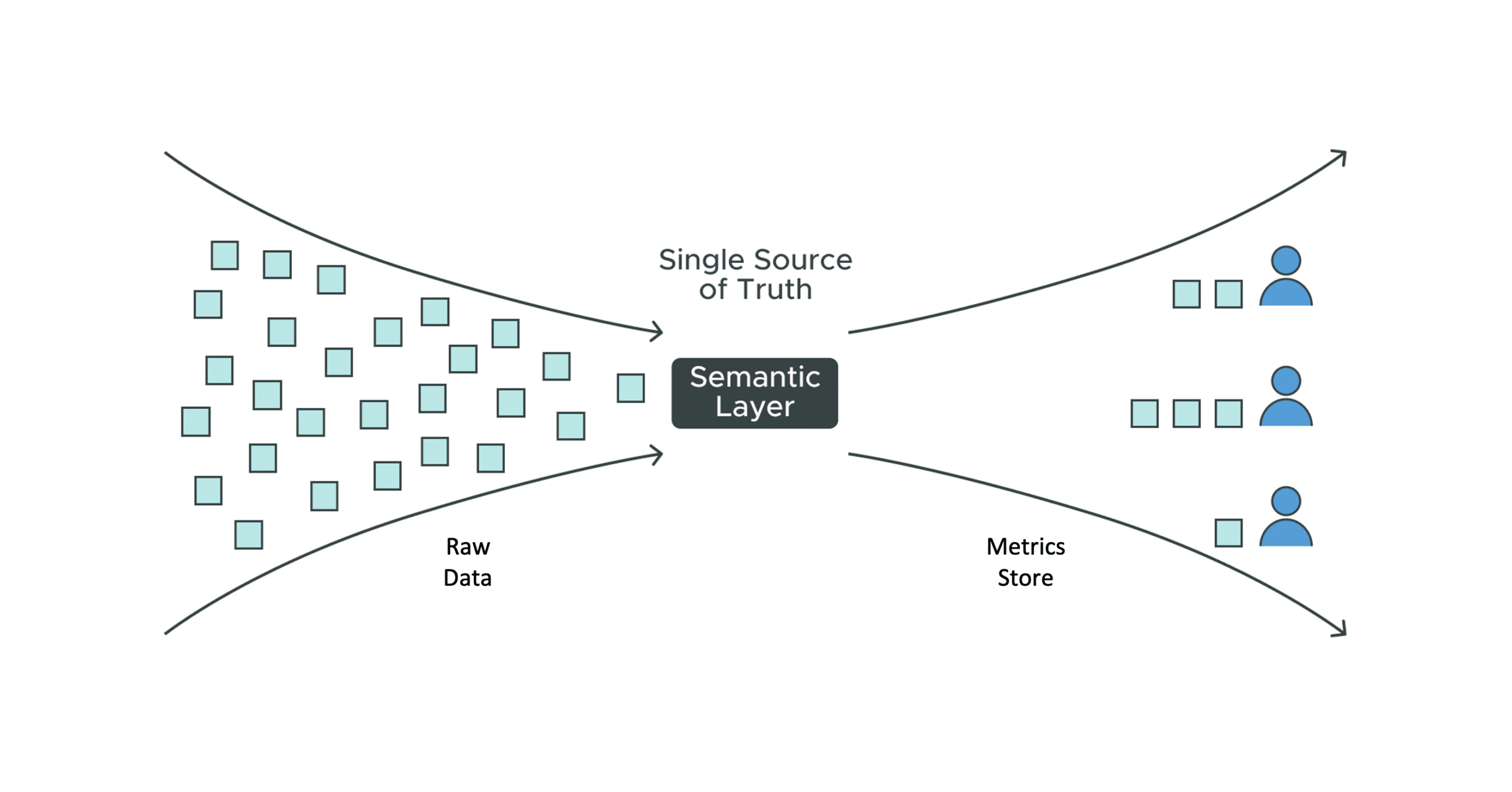

According to Gartner, Inc in a September 2022 research report, Innovation Insight: Metrics Store, “Metrics stores, which play as a stand-alone layer sitting between data warehouses and downstream analytics tools, attract D&A leaders’ attention for their ability to drive metrics standardization. They aim to decentralize and democratize the ability to build and publish enterprise wide metrics, reducing the repetitive work with the ‘build once, use many’ vision.”

In Dave Mariani’s paper on the semantic layer within the modern data stack, he positions the metrics store (aka the metrics layer) as one of the four fundamental services comprising a robust semantic layer (the other three being data modeling, workflow orchestration, and entitlement and security management). Metrics stores act as the repository of pre-defined metric definitions that can be served to analytics layer tools, including business intelligence applications, data apps, and data science tools. Metrics stores within an AtScale-managed semantic layer are logical definitions that are materialized and served at the time of user query (vs. a pre-staged repository of pre-computed values). Metrics stores are directly analogous to the concept of a feature store familiar to data scientists. We have written extensively on using AtScale to build feature stores and on how to integrate AtScale with feature store platforms. On a related topic, we have written about using AtScale’s data modeling engine to simplify feature engineering for data scientists.

With the rise of the analytics engineer, and with more progressive enterprise data teams looking to align their business intelligence and data science teams, a robust management approach for metrics stores is becoming critical.

A wide range of business metrics are managed within metrics stores, including:

Simple Quantitative Metrics are based on measures like ship quantity, revenue, and cost. They are produced by counts or sums of values in specific fields of source tables that hold raw application data (e.g. CRM, ERP). Governing which tables these metrics are pulled from, and how, is key: the metrics store becomes the single source of truth for key business metrics. Quantitative metrics can be aggregated with sums or with statistical summaries like an average. The metric definition may include rules about which levels of aggregation across which dimensions are appropriate.

Calculated Metrics are created with calculations executed as part of the metric definition. For instance, a Gross Margin metric may be calculated as (revenue – cost) / revenue. The metrics store is the place to maintain consistency of both the underlying quantitative metric and the calculation. Calculated metrics can typically be aggregated in a similar manner to simple quantitative metrics.
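As a minimal sketch of the Gross Margin example above, the snippet below keeps the calculation in one function and applies it to aggregated measures. The `SalesRow` type, its field names, and the sample numbers are assumptions made for illustration; this is plain Python, not AtScale's metric definition syntax.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class SalesRow:
    """One row of source data; the field names are illustrative assumptions."""
    region: str
    revenue: float
    cost: float


def gross_margin(revenue: float, cost: float) -> float:
    """Gross margin as given in the text: (revenue - cost) / revenue."""
    if revenue == 0:
        raise ValueError("gross margin is undefined when revenue is zero")
    return (revenue - cost) / revenue


def gross_margin_for(rows: List[SalesRow]) -> float:
    """Sum the underlying measures first, then apply the calculation once."""
    total_revenue = sum(r.revenue for r in rows)
    total_cost = sum(r.cost for r in rows)
    return gross_margin(total_revenue, total_cost)


rows = [
    SalesRow("East", revenue=100.0, cost=50.0),
    SalesRow("West", revenue=300.0, cost=240.0),
]

# Margin computed from the aggregated measures.
overall = gross_margin_for(rows)

# For comparison only: averaging per-row margins weights every row equally,
# regardless of how much revenue it represents.
average_of_row_margins = sum(gross_margin(r.revenue, r.cost) for r in rows) / len(rows)
```

In this sketch the two numbers differ, which is one reason a metric definition may need to state how a ratio-style calculation should be rolled up.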

Time Relative Metrics combine quantitative (or calculated) metrics with a defined relationship to a time dimension. For instance, the percent change in revenue from one year to the next is a time relative metric, as is a rolling 3 month average of sales quantity. Time relative metrics are commonly used as data science features, but they are also common in business intelligence and data applications.
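The two time relative examples above can be sketched in a few lines of plain Python. The function names, the choice to return `None` until a full window is available, and the sample values are assumptions for illustration only.

```python
from typing import List, Optional


def percent_change(current: float, previous: float) -> float:
    """Percent change from the previous period to the current one."""
    if previous == 0:
        raise ValueError("percent change is undefined when the previous value is zero")
    return (current - previous) / previous * 100.0


def rolling_average(values: List[float], window: int = 3) -> List[Optional[float]]:
    """Trailing average over `window` periods; None until the window is full."""
    if window <= 0:
        raise ValueError("window must be positive")
    result: List[Optional[float]] = []
    for i in range(len(values)):
        if i + 1 < window:
            result.append(None)
        else:
            chunk = values[i + 1 - window : i + 1]
            result.append(sum(chunk) / window)
    return result


# Year-over-year change in revenue (illustrative numbers).
revenue_by_year = {2021: 200.0, 2022: 250.0}
yoy = percent_change(revenue_by_year[2022], revenue_by_year[2021])

# Rolling 3 month average of sales quantity, one value per month.
monthly_quantity = [10.0, 20.0, 30.0, 40.0]
rolling = rolling_average(monthly_quantity, window=3)
```

Both functions take an already aggregated quantitative metric as input, which mirrors how a time relative metric is layered on top of a simpler one.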

Categorical Metrics represent discrete values that don’t have an ordered relationship. For instance, colors or breeds of dogs can be used as categorical metrics.

Ordinal Metrics are similar to categorical metrics, but the categories can be ordered. For instance, scales such as low, medium, high or poor, middle class, wealthy are ordinal.

Single, centralized metrics stores are fundamental to building a scalable semantic layer strategy. But managing large metrics stores can become complicated. Even well-governed programs, where the creation of new metrics is carefully monitored, can face metrics sprawl, where the sheer number of metrics (all valid representations of data) becomes unwieldy to manage.

Let’s consider a simple example: I work at a large automotive company and I want to measure ‘profitability’. I want to start looking at profit by dealership, by class, by month, and by year across a variety of accounts and codes. I have my metrics defined in Excel, but my counterparts in sales and supply chain logistics use different tools like Tableau and PowerBI. I define a range of metrics: for instance, I calculate profit and then build month-to-date (MTD), year-to-date (YTD), average month-to-date (AVG MTD), and finally average year-to-date (AVG YTD) for my financial analysis roll-ups.

All of these calculated metrics will vary by region, dealership, and brand. For example, different dealerships may use different financial incentives to sell their vehicles, and these incentives need to be factored into the calculation of net revenue or profit, two critical KPIs. Seasonality matters too, and I may have to change these calculations every 3 to 6 months as new offers and incentives are introduced.
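To make the roll-ups in this example concrete, here is a rough sketch of MTD and YTD as running totals that restart at each month or year boundary. The helper name `period_to_date`, the `(date, profit)` input shape, and the sample figures are all assumptions; the averaged variants (AVG MTD, AVG YTD) are left out because the example does not say how they are defined.

```python
from datetime import date
from itertools import accumulate, groupby
from typing import Callable, Dict, Hashable, List, Tuple

DailyProfit = Tuple[date, float]


def period_to_date(
    daily: List[DailyProfit],
    period_key: Callable[[date], Hashable],
) -> Dict[date, float]:
    """Running total of profit that restarts whenever period_key(day) changes."""
    ordered = sorted(daily, key=lambda item: item[0])
    totals: Dict[date, float] = {}
    for _, group in groupby(ordered, key=lambda item: period_key(item[0])):
        entries = list(group)
        running = accumulate(value for _, value in entries)
        for (day, _), total in zip(entries, running):
            totals[day] = total
    return totals


# Illustrative daily profit figures (one entry per date).
daily_profit = [
    (date(2023, 1, 30), 100.0),
    (date(2023, 1, 31), 50.0),
    (date(2023, 2, 1), 25.0),
    (date(2024, 1, 2), 10.0),
]

# Month-to-date restarts every calendar month; year-to-date every calendar year.
mtd = period_to_date(daily_profit, lambda d: (d.year, d.month))
ytd = period_to_date(daily_profit, lambda d: d.year)
```

Each additional dimension (region, dealership, brand) multiplies the number of such definitions, which is where the metric counts start to grow.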

The result: metrics stores can hold thousands of metrics and associated metric calculations, depending on the complexity of the data environment, the tooling in use, and the business objectives. Furthermore, these calculations can be complex because of the formulas required to compute their values. If the definitions change periodically, updating thousands of metrics becomes cumbersome and introduces the risk of making decisions on inaccurate or outdated metrics.

What is Metrics Engineering?

Managing how metrics are defined, created, updated, viewed, and deleted is critical to the success of any metrics store. This can only happen with the right data foundation in place. AtScale includes a powerful data modeling platform that lets organizations build logical representations of source data assets and define key metrics. We just announced AtScale Modeling Language as a code-first data modeling approach that complements our visual modeling canvas.

Python-based metrics engineering delivers an additional tool for managing large metrics stores through Python scripts. With a metrics store backed by a semantic layer, data engineers, analytics engineers, and data scientists can now ‘engineer’ and programmatically manage their metrics via AtScale’s Python library available in AI-Link. Developers can directly manipulate metrics, calculated metrics, and even categorical features created in ML/AI pipelines, using AtScale ‘python helper functions’ from their native Python environments.
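The idea of scripted create, update, and delete operations can be illustrated with a small in-memory stand-in. Everything in this sketch is hypothetical: `MetricDefinition`, `MetricRegistry`, and `batch_update` are invented for illustration and are not the AI-Link API, whose actual function names and signatures should be taken from AtScale's documentation.

```python
from dataclasses import dataclass, replace
from typing import Callable, Dict


@dataclass(frozen=True)
class MetricDefinition:
    """A named metric with its calculation stored as an expression string."""
    name: str
    expression: str


class MetricRegistry:
    """Hypothetical in-memory stand-in for a metrics store (not the AI-Link API)."""

    def __init__(self) -> None:
        self._metrics: Dict[str, MetricDefinition] = {}

    def create(self, metric: MetricDefinition) -> None:
        if metric.name in self._metrics:
            raise ValueError(f"metric {metric.name!r} already exists")
        self._metrics[metric.name] = metric

    def get(self, name: str) -> MetricDefinition:
        return self._metrics[name]

    def update(self, name: str, expression: str) -> None:
        if name not in self._metrics:
            raise KeyError(name)
        self._metrics[name] = replace(self._metrics[name], expression=expression)

    def delete(self, name: str) -> None:
        del self._metrics[name]

    def batch_update(
        self,
        predicate: Callable[[MetricDefinition], bool],
        rewrite: Callable[[str], str],
    ) -> int:
        """Rewrite the expression of every matching metric; return how many changed."""
        changed = 0
        for name, metric in list(self._metrics.items()):
            if predicate(metric):
                new_expression = rewrite(metric.expression)
                if new_expression != metric.expression:
                    self.update(name, new_expression)
                    changed += 1
        return changed


registry = MetricRegistry()
for region in ["east", "west"]:
    registry.create(MetricDefinition(f"profit_{region}", "revenue - cost"))
registry.create(MetricDefinition("ship_quantity", "SUM(quantity)"))

# A new incentive program changes how every profit metric is calculated.
changed = registry.batch_update(
    predicate=lambda m: m.name.startswith("profit_"),
    rewrite=lambda expr: expr.replace("revenue - cost", "revenue - cost - incentives"),
)
```

The point of the sketch is the shape of the workflow: one scripted rule replaces many manual edits, and the script itself can be reviewed and version-controlled like any other code.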

How Metrics Engineering Supports Analytics Engineers and Data Scientists

This new metrics engineering capability supports analytics engineers and data scientists in three important ways:

- Improve productivity by simplifying metric lifecycle management. Analytics engineering and data science skills are in high demand, and a lack of resources keeps many organizations from gaining more value from their data and AI investments. Metrics engineering can save significant time by automating batch updates to metrics that would otherwise need to be curated manually.

- Simplify creation of more complex measures (e.g. time-relative) that can improve business decision-making. It is often cited that data scientists spend 80% of their time on feature engineering. As enterprise data teams mature and strive to deliver a richer set of metrics to their audiences, they are running into the same reality. Metrics engineering simplifies the creation of complex measures, again saving time and making analytics engineers more productive.

- Open up new avenues for creating richer, business-ready metric calculations that are augmented by AI/ML (error rates, key drivers, predicted vs. actuals). This makes it possible to distribute a new class of AI-augmented business metrics through existing business intelligence infrastructure (i.e. dashboards and reports), reaching a much broader business audience.

We are excited to bring metrics engineering to the AtScale community and are looking forward to co-innovating with our customers on the potential.
