September 17, 2019

ETL vs ELT: What’s the Difference? & How to Choose

One of my favorite parts of my job at AtScale is spending time with customers and prospects. More specifically, I enjoy learning what matters most to them as they move to a modern data architecture.

I’ve noticed six main themes that keep coming up during these discussions. They appear in all industries, use cases, and geographies. So, I’ve come to think of them as the fundamental principles of modern data analytics architecture.


- View data as a shared asset.

- Provide user interfaces for consuming data.

- Ensure security and access controls.

- Establish a common vocabulary.

- Curate the data.

- Eliminate data copies and movement.

Whether you are responsible for the data, systems, analysis, strategy, or results, you can use these six principles to navigate the fast-paced world of data and decisions. Think of them as a foundation for your data architecture, one that lets your business run at an optimized level today and tomorrow.

1. View Data as a Shared Asset.

Enterprises that start with a vision of data as a shared asset outperform their competition. Instead of allowing departmental data silos to persist, these enterprises ensure that all stakeholders have a complete view of the company.

By “complete,” I mean a 360-degree view of customer insights and the ability to correlate valuable data signals from all business functions, such as manufacturing and logistics. The result is improved corporate efficiency.


2. Provide User Interfaces for Consuming Data.

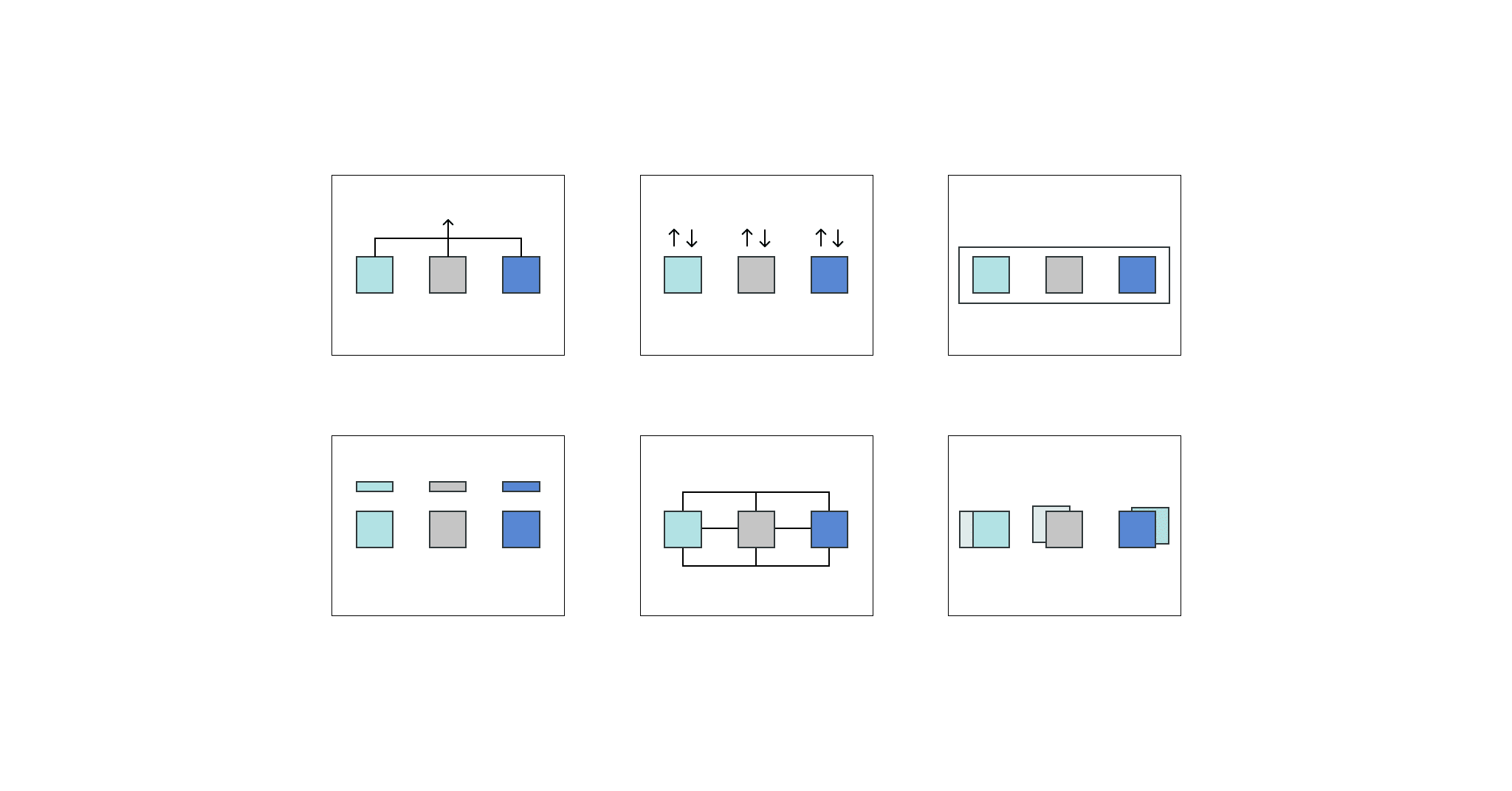

Putting data in one place isn't enough to achieve the vision of a data-driven culture. The days of relying on a single, monolithic data warehouse are long gone. To meet scalability needs, a modern data architecture typically combines data warehouses, data lakes, and data marts.

How do warehouses, lakes, and marts function in modern data analytics architecture? Here’s an easy breakdown:

- Data warehouses: The central repository of structured, processed data used for reporting and analysis.

- Data lakes: A large repository that holds data in its raw, native format until it is needed.

- Data marts: The serving layer; a simplified database focused on a particular team or line of business.
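To make the three layers concrete, here is a minimal sketch in Python that uses the standard-library `sqlite3` module as a stand-in for real platforms. It assumes hypothetical order events that land in a lake as raw JSON strings, are typed and loaded into a shared warehouse table, and are then served to one team through a simplified mart view. All table, view, and field names are illustrative only.

```python
import json
import sqlite3

# Hypothetical raw events as they might land in a data lake: untyped JSON strings.
RAW_EVENTS = [
    '{"order_id": 1, "region": "EMEA", "amount": "120.50"}',
    '{"order_id": 2, "region": "AMER", "amount": "80.00"}',
    '{"order_id": 3, "region": "EMEA", "amount": "42.25"}',
]


def build_layers() -> sqlite3.Connection:
    """Load raw JSON into a typed warehouse table, then expose a team-specific mart view."""
    conn = sqlite3.connect(":memory:")
    # Warehouse layer: one structured, typed table shared by every consumer.
    conn.execute(
        "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, region TEXT, amount REAL)"
    )
    rows = []
    for line in RAW_EVENTS:
        event = json.loads(line)
        rows.append((event["order_id"], event["region"], float(event["amount"])))
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    # Mart layer: a simplified, pre-aggregated view for one line of business.
    conn.execute(
        "CREATE VIEW emea_sales_mart AS "
        "SELECT region, COUNT(*) AS orders, SUM(amount) AS revenue "
        "FROM orders WHERE region = 'EMEA' GROUP BY region"
    )
    return conn


if __name__ == "__main__":
    conn = build_layers()
    print(conn.execute("SELECT * FROM emea_sales_mart").fetchall())
```

In a real architecture each layer would usually live on a different platform; collapsing them into one in-memory database just keeps the example self-contained.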

To take advantage of these structures, data must be able to move freely to and from warehouses, lakes, and marts. And for people (and systems) to benefit from a shared data asset, you must provide the interfaces that make it easy for users to consume that data.

Some businesses accomplish this with an OLAP interface for business intelligence, an SQL interface for data analysts, a real-time API for targeting systems, or the R language for data scientists.

Others take a more business-wide approach, such as deploying “data as code.”

In the end, it’s about letting your people work with the familiar tools they need to perform their jobs well.

3. Ensure Security and Access Controls.

Unified data platforms like Snowflake, Google BigQuery, Amazon Redshift, and Hadoop make it necessary to enforce data policies and access controls directly on the raw data, rather than across a web of downstream data stores and applications. Data security projects like Apache Sentry make this unified approach to data security a reality. Look for technologies that secure your modern data architecture and deliver broad self-service access without compromising control.
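The idea of enforcing policy once, at the data layer, can be sketched as a single read path that every consumer goes through. This is a toy model under stated assumptions, not how Apache Sentry or any of the platforms named above implement it: the roles, the `Policy` fields, and the `read_customers` function are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

# Hypothetical raw customer records held in the shared data platform.
CUSTOMERS = [
    {"id": 1, "name": "Ada", "region": "EMEA", "email": "ada@example.com"},
    {"id": 2, "name": "Bo", "region": "AMER", "email": "bo@example.com"},
]


@dataclass(frozen=True)
class Policy:
    """Access rule for one role: visible columns plus an optional row filter."""
    columns: frozenset
    region: Optional[str] = None  # None means rows from every region are visible


# Hypothetical roles; real platforms manage these centrally, not in application code.
POLICIES: Dict[str, Policy] = {
    "analyst_emea": Policy(frozenset({"id", "name", "region"}), region="EMEA"),
    "admin": Policy(frozenset({"id", "name", "region", "email"})),
}


def read_customers(role: str) -> List[dict]:
    """Single enforcement point that every tool reads through."""
    policy = POLICIES.get(role)
    if policy is None:
        raise PermissionError(f"no access policy for role {role!r}")
    visible = []
    for row in CUSTOMERS:
        if policy.region is not None and row["region"] != policy.region:
            continue  # row-level filter
        # Column-level masking: keep only the columns this role may see.
        visible.append({k: v for k, v in row.items() if k in policy.columns})
    return visible


if __name__ == "__main__":
    print(read_customers("analyst_emea"))
```

Because the row filter and column mask live in one place, a BI tool, a notebook, and an API that all call `read_customers` see consistent results for the same role, and changing a policy changes it everywhere at once.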

4. Establish a Common Vocabulary.

By investing in an enterprise data hub, enterprises can create a shared data asset for multiple consumers across the business. That’s the beauty of modern data analytics architectures.

However, it’s critical to ensure that users of this data analyze and understand it using a common vocabulary. Regardless of how users consume or analyze the data, you must standardize product catalogs, fiscal calendar dimensions, provider hierarchies, and KPI definitions. Without this shared vocabulary, you’ll spend more time disputing or reconciling results than driving improved performance.
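A fiscal calendar is a good example of a definition that should exist exactly once. The sketch below assumes, purely for illustration, a fiscal year that starts on February 1 and is labeled by the calendar year in which it ends; your organization's rules will differ. The point is that dashboards, notebooks, and APIs would all import the same `fiscal_label` function (a hypothetical name) instead of each deriving quarters on its own.

```python
from datetime import date
from typing import Tuple

# Assumption for illustration: the fiscal year begins on February 1.
FISCAL_YEAR_START_MONTH = 2


def fiscal_period(d: date) -> Tuple[int, int]:
    """Return (fiscal_year, fiscal_quarter) for a calendar date."""
    # Months elapsed since the start of the fiscal year, in the range 0..11.
    months_in = (d.month - FISCAL_YEAR_START_MONTH) % 12
    quarter = months_in // 3 + 1
    # Calendar year in which the current fiscal year started.
    start_year = d.year if d.month >= FISCAL_YEAR_START_MONTH else d.year - 1
    # Assumption: label the fiscal year by the calendar year in which it ends.
    ends_in_next_year = FISCAL_YEAR_START_MONTH != 1
    fiscal_year = start_year + 1 if ends_in_next_year else start_year
    return fiscal_year, quarter


def fiscal_label(d: date) -> str:
    """Shared, human-readable label such as 'FY2024-Q4'."""
    year, quarter = fiscal_period(d)
    return f"FY{year}-Q{quarter}"


if __name__ == "__main__":
    print(fiscal_label(date(2024, 1, 15)))  # FY2024-Q4
    print(fiscal_label(date(2023, 2, 1)))   # FY2024-Q1
```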

Many businesses lean on a data mesh approach to facilitate this shared vocabulary. And technologies like a universal semantic layer make this methodology possible by translating raw data into business-ready data.
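A universal semantic layer can be pictured as a mapping from business terms to expressions over raw columns, plus a compiler that turns a business question into a query. The following is a deliberately tiny sketch of that idea, not AtScale's product or API: the `SEMANTIC_MODEL` dictionary, the raw column names, and `compile_query` are all hypothetical, and `sqlite3` stands in for the data platform.

```python
import sqlite3
from typing import List

# Hypothetical semantic model: business names mapped onto cryptic raw columns.
SEMANTIC_MODEL = {
    "table": "raw_sales",
    "dimensions": {"Region": "rgn_cd"},
    "measures": {
        "Net Revenue": "SUM(gross_amt - discount_amt)",
        "Order Count": "COUNT(DISTINCT ord_id)",
    },
}


def compile_query(measures: List[str], dimensions: List[str]) -> str:
    """Translate business terms into SQL; unknown terms raise KeyError."""
    dim_cols = [SEMANTIC_MODEL["dimensions"][d] for d in dimensions]
    select = [f'{col} AS "{d}"' for col, d in zip(dim_cols, dimensions)]
    select += [f'{SEMANTIC_MODEL["measures"][m]} AS "{m}"' for m in measures]
    sql = f'SELECT {", ".join(select)} FROM {SEMANTIC_MODEL["table"]}'
    if dim_cols:
        group_by = ", ".join(dim_cols)
        sql += f" GROUP BY {group_by} ORDER BY {group_by}"
    return sql


def demo() -> list:
    """Load a few raw rows and answer a business question through the model."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE raw_sales "
        "(ord_id INTEGER, rgn_cd TEXT, gross_amt INTEGER, discount_amt INTEGER)"
    )
    conn.executemany(
        "INSERT INTO raw_sales VALUES (?, ?, ?, ?)",
        [(1, "EMEA", 100, 10), (2, "EMEA", 50, 0), (3, "AMER", 80, 5)],
    )
    sql = compile_query(["Net Revenue", "Order Count"], ["Region"])
    rows = conn.execute(sql).fetchall()
    conn.close()
    return rows


if __name__ == "__main__":
    print(demo())  # [('AMER', 75, 1), ('EMEA', 140, 2)]
```

Because requests can only reference names defined in the model, every consumer gets the same definition of "Net Revenue", whichever tool asked for it.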


5. Curate the Data.

Curating your data is essential to a modern data analytics architecture. Time and time again, I've seen enterprises that have invested in Hadoop or a cloud-based data lake like Amazon S3 or Google Cloud Storage start to suffer when they allow self-service access to the raw data stored there.

Without proper data curation (modeling important relationships, cleansing raw data, and curating key dimensions and measures), end users can have a frustrating experience. This vastly reduces the perceived and realized value of the underlying data. By investing in core functions that perform data curation, you have a better chance of realizing the value of the shared data asset.
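The cleansing part of curation can be illustrated with a small Python sketch. It assumes hypothetical raw order records with inconsistent casing, stray whitespace, a duplicate, and an unparseable amount. The `curate` function trims and normalizes the `country` dimension, deduplicates on `order_id`, and drops rows whose `amount` measure cannot be parsed; real pipelines often quarantine bad rows for review rather than discarding them.

```python
from typing import Dict, Iterable, List

# Hypothetical raw records as they might sit in an uncurated data lake.
RAW = [
    {"order_id": "1", "country": " us ", "amount": "19.99"},
    {"order_id": "1", "country": "US", "amount": "19.99"},  # duplicate of order 1
    {"order_id": "2", "country": "de", "amount": "n/a"},    # unparseable measure
    {"order_id": "3", "country": "DE", "amount": "5.01"},
]


def curate(records: Iterable[Dict[str, str]]) -> List[Dict[str, object]]:
    """Cleanse raw records into typed rows with a normalized country dimension."""
    seen = set()
    curated = []
    for rec in records:
        order_id = int(rec["order_id"])
        if order_id in seen:
            continue  # deduplicate on the business key
        try:
            amount = float(rec["amount"])
        except ValueError:
            continue  # drop rows whose measure cannot be parsed
        seen.add(order_id)
        curated.append({
            "order_id": order_id,
            "country": rec["country"].strip().upper(),  # one spelling per dimension value
            "amount": amount,
        })
    return curated


def revenue_by_country(rows: Iterable[Dict[str, object]]) -> Dict[str, float]:
    """A curated measure (revenue) sliced by a curated dimension (country)."""
    totals: Dict[str, float] = {}
    for row in rows:
        totals[row["country"]] = totals.get(row["country"], 0.0) + row["amount"]
    return totals


if __name__ == "__main__":
    print(revenue_by_country(curate(RAW)))
```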


6. Eliminate Data Copies and Movement.

Every time someone moves data, there is an impact on cost, accuracy, and time. Talk to any IT group or business user, and they all agree: the fewer times data gets moved, the better.

Cloud data platforms and distributed file systems promise a multi-structure, multi-workload environment for the parallel processing of massive data sets. These platforms are designed to scale linearly as workloads and data volumes grow. Modern enterprise data architectures eliminate unnecessary data movement, which reduces cost, increases "data freshness," and optimizes overall data agility.
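The freshness argument can be demonstrated with Python's `sqlite3` module. In the sketch below, which keeps everything in one in-memory database for simplicity, a physical copy taken before new data arrives goes stale, while a view that queries the source in place reflects the new row. In a real architecture the copy would typically be an extract into another system, and the in-place query might go through a semantic or virtualization layer; both are assumptions of this illustration.

```python
import sqlite3
from typing import Tuple


def demo_copy_vs_view() -> Tuple[float, float]:
    """Return (total seen through a stale copy, total seen through a live view)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL)")
    conn.execute("INSERT INTO orders (amount) VALUES (10.0)")
    # A physical copy, as an extract into a downstream store would be.
    conn.execute("CREATE TABLE orders_copy AS SELECT * FROM orders")
    # A logical view: no data moves; the query runs against the source table.
    conn.execute("CREATE VIEW orders_view AS SELECT * FROM orders")
    # New data lands in the source after the copy was taken.
    conn.execute("INSERT INTO orders (amount) VALUES (5.0)")
    copy_total = conn.execute("SELECT SUM(amount) FROM orders_copy").fetchone()[0]
    view_total = conn.execute("SELECT SUM(amount) FROM orders_view").fetchone()[0]
    conn.close()
    return copy_total, view_total


if __name__ == "__main__":
    print(demo_copy_vs_view())  # (10.0, 15.0)
```

Keeping the copy current would require another load job, which is exactly the cost, accuracy, and time overhead described above.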

Regardless of your industry, role in your organization, or where you are in your big data journey, you can adopt and share these principles as you modernize your big data architecture. While the path can seem long and challenging, you could make this transformation sooner than you think with the proper framework and principles.

Ready to take the next step in your big data journey? Learn how AtScale's approach to scaling data analytics through modern data models enables a hub-and-spoke approach to managing analytics.
