Definition

Data Fabric is a framework and network-based architecture (as opposed to point-to-point connections) for delivering large, consistent, integrated data from a centralized technology infrastructure using a hybrid cloud. A data fabric is an architecture and set of data services that provide consistent capabilities across a choice of endpoints spanning hybrid multi-cloud environments. This enables an integrated data layer (fabric) to be provided from multiple data sources to support analytics, insight generation, orchestration, and applications. The Data Fabric can be viewed as the centralized technology that enables a Data Mesh.

Purpose

The purpose of the Data Fabric is to deliver large, consistent, integrated data from a centralized technology infrastructure, particularly from a hybrid cloud / multi-cloud environment. Data Fabrics are designed to deliver availability, accuracy, efficiency and consistency cost-effectively. They are particularly beneficial for delivering large, integrated data and tools for business intelligence and analytics, where data size, commonality of reporting and analysis needs, and data accuracy, availability and consistency are most important.

Key Capabilities to Consider when Implementing a Data Fabric

- An enterprise data fabric focuses on providing large, enterprise-wide integrated data sets, emphasizing availability, accuracy and consistency, from a single, integrated, automated set of data management technologies

- Data Fabric core technology infrastructure is centralized to ensure that data and applications are governed and synchronized

- Data Fabrics are designed to work in the cloud, particularly public and hybrid clouds

- Data Fabric can be a data delivery technology architecture to support a data mesh

- A data fabric utilizes continuous analytics over existing, discoverable metadata assets to support the design, deployment and utilization of integrated and reusable data across all environments, including hybrid and multi-cloud platforms.

- Data fabric leverages both human and machine capabilities to access data in place or support its consolidation where appropriate

- Data Fabric continuously identifies and connects data from disparate applications to discover unique, business-relevant relationships between the available data points

- The architecture supports faster decision-making, providing more value through rapid access and comprehension than traditional data management practices.
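One of the capabilities above, connecting data from disparate applications by analyzing discoverable metadata, can be illustrated with a small sketch. It assumes a hypothetical in-memory catalog (`CATALOG`) that maps dataset names to column names, and it treats shared column names as candidate relationships. Real data fabric products use far richer metadata and matching logic; the dataset and column names here are invented for illustration.

```python
from itertools import combinations

# Hypothetical metadata catalog: dataset name -> set of column names.
CATALOG = {
    "crm.customers": {"customer_id", "name", "region"},
    "billing.invoices": {"invoice_id", "customer_id", "amount"},
    "web.sessions": {"session_id", "customer_id", "region"},
}


def candidate_relationships(catalog):
    """Return dataset pairs mapped to the columns they share (candidate join keys)."""
    links = {}
    # Sort by dataset name so the pair ordering is deterministic.
    for (a, cols_a), (b, cols_b) in combinations(sorted(catalog.items()), 2):
        shared = cols_a & cols_b
        if shared:
            links[(a, b)] = sorted(shared)
    return links


for pair, columns in candidate_relationships(CATALOG).items():
    print(pair, columns)
```

Matching on column names alone is a deliberately naive choice; it only shows how metadata, rather than the data itself, can drive the discovery of relationships.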

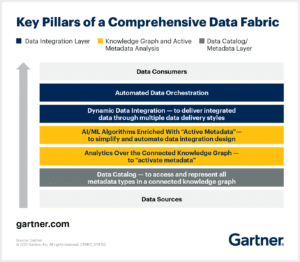

According to Gartner, key pillars of a data fabric are as follows:

Primary Uses of a Data Fabric

Data Fabric is used to increase the scale, consistency and cost effectiveness of delivering large, integrated datasets from a common set of data management capabilities in a multi-cloud environment. By contrast, the data mesh framework and architecture is most appropriate when the business domains have diverse needs in terms of the data, insights and analytics that they use, such that centralized data, reporting and analysis is not required or beneficial. The data fabric requires the following capabilities:

- Singular set of common data management capabilities designed to deliver ready-to-analyze datasets from a hybrid-cloud environment

- Centralized data governance, including centralized data catalogs and semantic layer

- Centralized IT team focused on providing and supporting conformed data availability (e.g. from data lake / data warehouse)

- Centralized IT utilizing a common set of data / data product capabilities

- Processes are consistent and automated to improve speed of data delivery

Data Fabric can be the technology that provides the data needed to create Data Products. A Data Product is a self-contained dataset that includes all elements of the process required to transform the data into a published set of insights. For a Business Intelligence use case, the elements are data set creation, the data model / semantic model and published results, including reports and analyses that may be delivered via spreadsheets or a BI application. Examples of data products are shown below.

Key Business Benefits of Data Fabric

The main benefits of Data Fabric are increased speed, scale, availability, accuracy and consistency in delivering ready-to-analyze integrated data to users.

Common Roles and Responsibilities Associated with Data Fabric

Roles important to Data Fabric are as follows:

- Insights Creators – Insights creators (e.g. data analysts) are responsible for creating insights from data and delivering them to insights consumers. Insights creators typically design the reports and analyses, and often develop them, including reviewing and validating the data. Insights creators are supported by insights enablers.

- Insights Enablers – Insights enablers (e.g. data engineers, data architects, BI engineers) are responsible for making data available to insights creators, including helping to develop the reports and dashboards used by insights consumers.

- Insights Consumers – Insights consumers (e.g. business leaders and analysts) are responsible for using insights and analyses created by insights creators to improve business performance, including through improved awareness, plans, decisions and actions.

- BI Engineer – The BI engineer is responsible for delivering business insights using OLAP methods and tools. The BI engineer works with the business and technical teams to ensure that the data is available and modeled appropriately for OLAP queries, and then builds those queries, including designing the outputs (reports, visuals, dashboards) typically using BI tools. In some cases, the BI engineer also models the data.

- Business Owner – There needs to be a business owner who understands the business needs for data and subsequent reporting and analysis. This is to ensure accountability and actionability, as well as ownership of data quality and data utility based on the data model. The business owner and project sponsor are responsible for reviewing and approving the data model as well as the reports and analysis that OLAP will generate. For larger, enterprise-wide insights creation and performance measurement, a governance structure should be considered to ensure cross-functional engagement and ownership for all aspects of data acquisition, modeling and usage (reporting and analysis).

- Data Analyst / Business Analyst – Often a business analyst or, more recently, a data analyst is responsible for defining the uses and use cases of the data, as well as providing design input to the data structure, particularly the metrics, business questions / queries and outputs (reports and analyses) to be produced and improved. Responsibilities also include owning the roadmap for how data is going to be enhanced to address additional business questions and existing insights gaps.

Common Business Processes Associated with Data Fabric

The process for developing and deploying Data Fabric is as follows:

- Access – Data, often in structured ready-to-analyze form, is made securely available to approved users, including insights creators and enablers.

- Profiling – Data are reviewed for relevance, completeness and accuracy by insights creators and enablers. Profiling can and should occur for individual datasets and integrated datasets, both in raw form and in ready-to-analyze structured form.

- Preparation – Data are extracted, transformed, modeled, structured and made available in a ready-to-analyze form, often with standardized configurations and coded automation to enable faster data refresh and delivery. Data are typically made available in an easy-to-query form such as a database, spreadsheet or Business Intelligence application.

- Integration – When multiple data sources are involved, integration involves combining multiple data sources into a single, structured, ready-to-analyze dataset. Integration involves creating a single data model and then extracting, transforming and loading the individual data sources to conform to the data model, making the data available for querying by data insights creators and consumers.

- Extraction / Aggregation – The integrated dataset is made available for querying, including in aggregated form to optimize query performance.

- Analyze – The process of querying data to create insights that address specific business questions. Often analysis is based on queries against a structured database, made using business intelligence tools that automate the queries and present the data for faster, repeated use by data analysts, business analysts and decision-makers.

- Synthesize – Determine the key insights that the data are indicating, and determine the best way to convey those insights to the intended audience.

- Storytelling / Visualize – The data storyline, dashboards and visuals should be designed and then developed based on the business questions to be addressed and the queries implemented. Whether working in a waterfall or agile context, it is important to think about how the data will be presented so that the results are well understood and acted upon.

- Publish – Results of queries are made available for consumption via multiple forms, including as datasets, spreadsheets, reports, visualizations, dashboards and presentations.
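The data-handling steps in the process above (profiling, preparation, integration and aggregation) can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not a data fabric implementation: the `ORDERS` and `CUSTOMERS` records, the field names and the function names are all hypothetical, and a real deployment would run these steps with dedicated data management tooling.

```python
from collections import defaultdict

# Hypothetical source data standing in for two systems reached through the fabric.
ORDERS = [
    {"order_id": 1, "customer_id": "c1", "amount": "120.50"},
    {"order_id": 2, "customer_id": "c2", "amount": "80.00"},
    {"order_id": 3, "customer_id": "c1", "amount": None},  # incomplete record
]
CUSTOMERS = [
    {"customer_id": "c1", "region": "EMEA"},
    {"customer_id": "c2", "region": "APAC"},
]


def profile(rows, field):
    """Profiling: report the share of rows where a field is populated."""
    filled = sum(1 for r in rows if r.get(field) is not None)
    return filled / len(rows)


def prepare(rows):
    """Preparation: drop incomplete rows and cast amounts to numbers."""
    return [{**r, "amount": float(r["amount"])} for r in rows if r["amount"] is not None]


def integrate(orders, customers):
    """Integration: conform both sources to one model keyed on customer_id."""
    region_by_customer = {c["customer_id"]: c["region"] for c in customers}
    return [{**o, "region": region_by_customer[o["customer_id"]]} for o in orders]


def aggregate(rows):
    """Aggregation: pre-summarize revenue by region to speed up later queries."""
    totals = defaultdict(float)
    for r in rows:
        totals[r["region"]] += r["amount"]
    return dict(totals)


completeness = profile(ORDERS, "amount")
revenue_by_region = aggregate(integrate(prepare(ORDERS), CUSTOMERS))
print(f"amount completeness: {completeness:.0%}")
print(revenue_by_region)
```

Keeping each step as a separate function mirrors the way the process is described: each stage can be automated, tested and re-run independently when a source is refreshed.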

Common Technologies Associated with Data Fabric

Technologies involved with the Data Fabric are as follows:

- Data Products – A Data Product is a self-contained dataset that includes all elements of the process required to transform the data into a published set of insights. For a Business Intelligence use case, the elements are data set creation, the data model / semantic model and published results, including reports and analyses that may be delivered via spreadsheets or a BI application.

- Data Preparation – Data preparation involves enhancing and aggregating data to make it ready for analysis, including to address a specific set of business questions.

- Data Modeling – Data modeling involves creating structure and consistency as well as standardization of the data via adding dimensionality, attributes, metrics and aggregation. Data models are both logical (reference) and physical. Data models ensure that data is structured in such a way that it can be stored and queried with transparency and effectiveness.

- Database – Databases store data for easy access, profiling, structuring and querying. Databases come in many forms to store many types of data.

- Data Querying – Technologies called Online Analytical Processing (OLAP) are used to automate data querying, which involves making requests for slices of data from a database. Queries can also be made using standardized languages or protocols such as SQL. Queries take data as an input and deliver a smaller subset of the data in a summarized form for reporting and analysis, including interpretation and presentation by analysts for decision-makers and action-takers.

- Data Warehouse – Data warehouses store data that are used frequently and extensively by the business for reporting and analysis. Data warehouses are constructed to store the data in a way that is integrated, secure and easily accessible for standard and ad-hoc queries for many users.

- Data Lake – Data lakes are centralized data storage facilities that automate and standardize the process for acquiring data, storing it and making it available for profiling, preparation, data modeling, analysis and reporting / publishing. Data lakes are often created using cloud technology, which makes data storage very inexpensive, flexible and elastic.

- Data Catalog – These applications make it easier to record and manage access to data, including at the source and dataset (e.g. data product) level.

- Semantic Layer – Semantic layer applications enable the development of a logical and physical data model for use by OLAP-based business intelligence and analytics applications. The Semantic Layer supports data governance by enabling management of all data used to create reports and analyses, as well as all data generated for those reports and analyses, thus enabling governance of the output / usage aspects of input data.

- Data Governance Tools – These tools automate the management of access to and usage of data. They can also be used to manage compliance by searching across data to determine whether the format and structure of the data being stored comply with policies.

- Business Intelligence (BI) Tools – These tools automate the OLAP queries, making it easier for data analysts and business-oriented users to create reports and analyses without having to involve IT / technical resources.

- Visualization tools – Visualizations are typically available within the BI tools and are also available as standalone applications and as libraries, including open source.

- Automation – Strong emphasis is placed on automating all aspects of the process for developing and delivering integrated data sets from hybrid-cloud environments.
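The Data Querying item above notes that a query takes data as input and returns a smaller, summarized subset. A minimal sketch of that idea, using SQL through Python's built-in `sqlite3` module, might look as follows. The `sales` table and its rows are hypothetical, and an in-memory SQLite database simply stands in for whatever warehouse or lake the fabric exposes.

```python
import sqlite3

# In-memory database standing in for a warehouse table (hypothetical data).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, product TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [
        ("EMEA", "widget", 100.0),
        ("EMEA", "gadget", 50.0),
        ("APAC", "widget", 75.0),
    ],
)

# The query reduces three detail rows to one summarized row per region.
summary = conn.execute(
    "SELECT region, SUM(amount) FROM sales GROUP BY region ORDER BY region"
).fetchall()
conn.close()

print(summary)
```

BI tools generate queries of this kind on the user's behalf, which is what allows analysts to slice data without writing SQL themselves.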

Trends / Outlook for Data Fabric

Key trends for the Data Fabric are as follows:

Semantic Layer – The semantic layer is a common, consistent representation of the data used for business intelligence (reporting and analysis) as well as for analytics. It is important because it provides one consistent way to define data in multidimensional form. Queries made from and across multiple applications, including multiple business intelligence tools, can then run against one common definition rather than against data models and definitions recreated within each tool. This ensures consistency and efficiency, including cost savings, and creates the opportunity to improve query speed and performance.
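The "one common definition" idea can be sketched as a tiny metric registry that generates SQL on request. This is an illustrative assumption, not AtScale's or any vendor's API: the `Metric` class, the `METRICS` registry and the `build_query` helper are hypothetical names, and the generated SQL is deliberately simplistic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    """A single, shared definition of a business metric (hypothetical model)."""
    name: str
    expression: str  # SQL aggregate expression
    table: str


# Hypothetical registry: every consuming tool reads definitions from here.
METRICS = {
    "revenue": Metric(name="revenue", expression="SUM(amount)", table="sales"),
}


def build_query(metric_name, dimensions):
    """Generate SQL for a metric sliced by the requested dimensions."""
    metric = METRICS[metric_name]
    dims = ", ".join(dimensions)
    return (
        f"SELECT {dims}, {metric.expression} AS {metric.name} "
        f"FROM {metric.table} GROUP BY {dims}"
    )


# Two different tools asking for the same metric get the same definition.
print(build_query("revenue", ["region"]))
print(build_query("revenue", ["region", "product"]))
```

Because the aggregate expression lives in one place, changing how revenue is calculated means editing a single definition rather than every report that uses it.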

Automation – Increased emphasis is being placed by vendors on ease of use and automation to increase the ability to scale data governance management and monitoring. This includes offering “drag and drop” interfaces to execute data-related permissions and usage management.

Observability – Recently, a host of new vendors have begun offering services referred to as “data observability”. Data observability is the practice of monitoring the data to understand how it is changing and being consumed. This trend, often called “dataops”, closely mirrors the “devops” trend in software development, which tracks how applications are performing and being used in order to understand, anticipate and address performance gaps and to improve proactively rather than reactively.
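As a rough sketch of what monitoring "how the data is changing" can mean in practice, the snippet below compares simple statistics between two snapshots of a dataset and raises alerts when they drift. The function names, thresholds and sample rows are assumptions made for illustration; commercial observability services track many more signals.

```python
def snapshot_stats(rows, field):
    """Compute basic health statistics for one snapshot of a dataset."""
    total = len(rows)
    nulls = sum(1 for r in rows if r.get(field) is None)
    return {"row_count": total, "null_rate": nulls / total if total else 0.0}


def detect_changes(previous, current, max_null_increase=0.1, max_row_drop=0.5):
    """Compare two snapshots and list alerts (thresholds are illustrative)."""
    alerts = []
    if current["null_rate"] - previous["null_rate"] > max_null_increase:
        alerts.append("null_rate_increase")
    if current["row_count"] < previous["row_count"] * (1 - max_row_drop):
        alerts.append("row_count_drop")
    return alerts


# Hypothetical snapshots of the same dataset on two consecutive days.
yesterday = [{"amount": 10.0}, {"amount": 12.0}, {"amount": 9.5}, {"amount": 11.0}]
today = [{"amount": 10.0}, {"amount": None}, {"amount": None}, {"amount": 11.5}]

alerts = detect_changes(
    snapshot_stats(yesterday, "amount"),
    snapshot_stats(today, "amount"),
)
print(alerts)
```

Running such checks on a schedule is what makes the approach proactive: a sudden rise in missing values is flagged before it reaches a published report.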

AtScale and Data Fabrics

AtScale’s semantic layer improves data fabric implementation by enabling faster insights creation via rapid data modeling for AI and BI, and by improving performance via automated query optimization. The Semantic Layer enables development of a unified, business-driven data model that defines what data can be used, including supporting the specific queries that generate data for visualization. This makes tracking and auditing easier. It also ensures that all aspects of how data are defined, queried and rendered across multiple dimensions, entities, attributes and metrics are known and tracked, including the source data and the queries made to develop output for reporting, analysis and analytics.
