April 13, 2023

Actionable Data Insights for Improved Business Results

There are six key areas for effectively consuming data for business insights: data, access, model, analyze, consume, and insights. For organizations looking to advance in each of these areas, AtScale’s Data and Analytics Maturity Model Workshop explains how teams can build their skills and knowledge.

In this blog post covering module five of the workshop, we discuss what DataOps is and how to implement an effective DataOps strategy. We also dive into the benefits of DataOps and discuss how a semantic layer can support it.

What is DataOps?

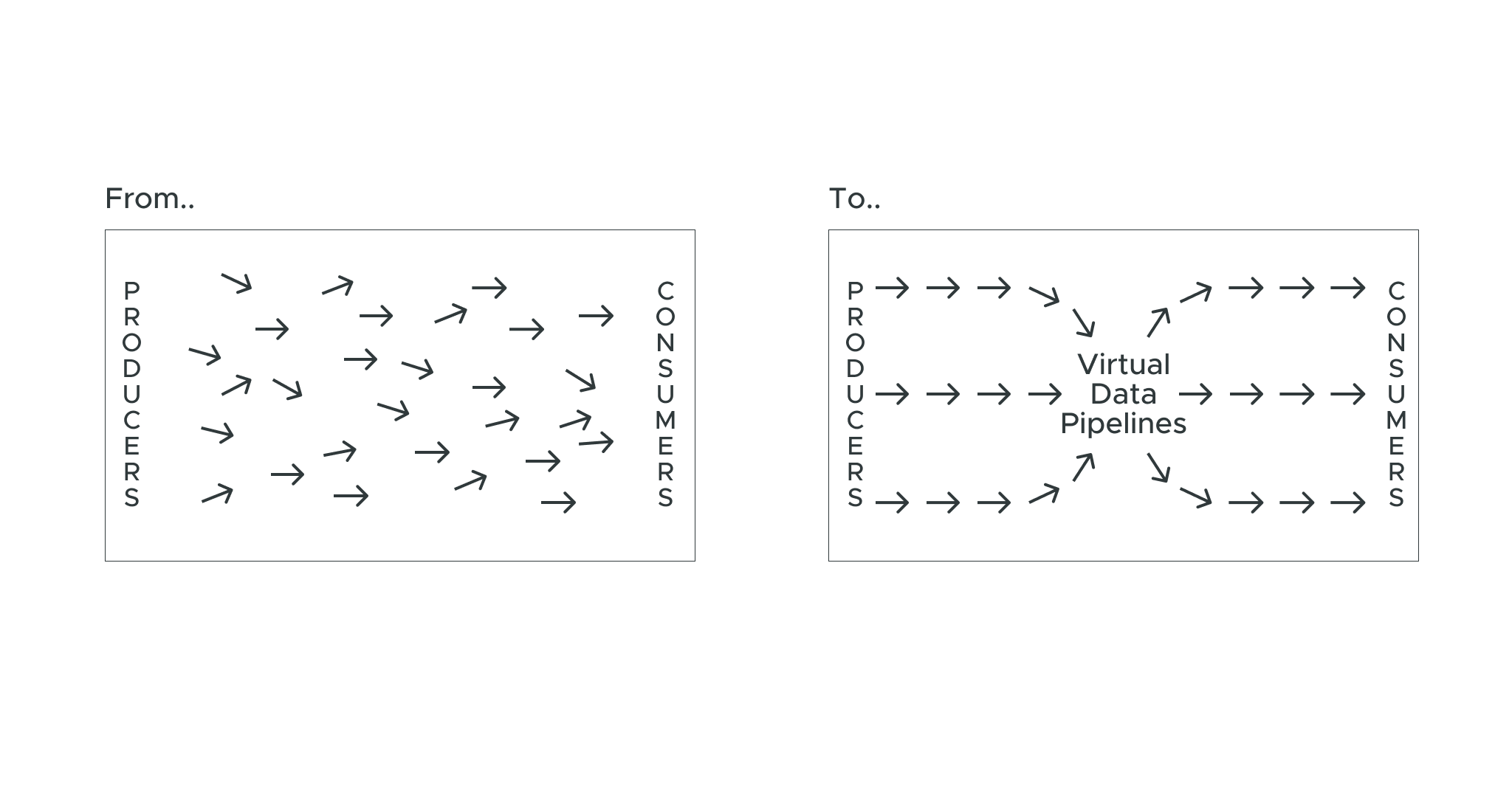

DataOps is the most advanced technique within the data and analytics maturity model for managing data access and data pipelines. According to Gartner, DataOps is a collaborative data management practice that focuses on improving communication, integration, and automation between data producers and data consumers across an organization.

Enterprises with a lower level of data access maturity rely on data extracts or extensive ETL/ELT processes, which prevent the delivery of live data to end users. Data virtualization can give users real-time, analytics-ready access to raw data, but DataOps goes a step further by improving collaboration between data teams and business teams.

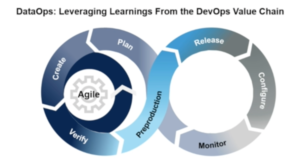

The idea behind DataOps is to manage data pipelines in much the same way that the DevOps approach manages the software development lifecycle (SDLC). That means taking the same Agile techniques used for planning, releasing, and monitoring software and applying them to the data pipeline value chain. These techniques include automation, version control, continuous iteration, quality testing, and more.
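To make "automation" and "quality testing" more concrete, here is a minimal sketch of a pipeline step written as ordinary code, paired with quality checks that a CI job could run on every change, just as unit tests run against application code. The `Order` record, the `transform_orders` and `check_quality` functions, and the specific checks are hypothetical illustrations, not part of any AtScale product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Order:
    """Hypothetical typed record produced by the pipeline step."""
    order_id: str
    region: str
    amount_usd: float


def transform_orders(raw_rows: list[dict]) -> list[Order]:
    """Hypothetical pipeline step: normalize raw rows into typed Order records."""
    orders = []
    for row in raw_rows:
        orders.append(
            Order(
                order_id=str(row["id"]).strip(),
                region=str(row["region"]).strip().upper(),
                amount_usd=round(float(row["amount"]), 2),
            )
        )
    return orders


def check_quality(orders: list[Order]) -> list[str]:
    """Return human-readable failures; an empty list means the data passed."""
    failures = []
    ids = [o.order_id for o in orders]
    if len(ids) != len(set(ids)):
        failures.append("duplicate order_id values")
    if any(o.amount_usd < 0 for o in orders):
        failures.append("negative amount_usd values")
    if any(not o.region for o in orders):
        failures.append("missing region values")
    return failures


if __name__ == "__main__":
    sample = [
        {"id": "A1", "region": " emea", "amount": "19.999"},
        {"id": "A2", "region": "na", "amount": "5"},
    ]
    orders = transform_orders(sample)
    problems = check_quality(orders)
    # A CI job would fail the build (non-zero exit) if any check fails.
    if problems:
        raise SystemExit("quality checks failed: " + "; ".join(problems))
    print(f"{len(orders)} orders passed quality checks")
```

Because both the transformation and its checks live in version control, a change to either one is reviewed, tested, and released through the same process as any other code.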

The Key Pillars for DataOps Success

There are four key pillars for implementing an effective DataOps approach:

- Adopting data integration as code by using data integration tools that can be managed as code, rather than managing the data pipeline manually. This enables version control and CI/CD techniques that treat data as a product delivered to data consumers.

- Leveraging continuous integration and continuous deployment (CI/CD) by implementing automated tooling to continuously develop and maintain data assets. This helps organizations accelerate and streamline the delivery of relevant data to data consumers.

- Improving collaboration by bringing together teams across the organization to communicate more effectively. Along with collaboration tools, common metadata definitions and shared metrics can improve data consistency throughout the business.

- Enhancing monitoring by tracking data usage and performance for data pipelines and user query behavior in a similar way to tracking product usage. This enables organizations to better estimate the business impact of data.
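The monitoring pillar treats query logs the way a product team treats usage analytics. As a rough sketch, assuming a hypothetical query log where each event records the user, the data product queried, and the query duration, per-product usage can be summarized like this (the `QueryEvent` fields and `summarize_usage` function are illustrative, not a real product API):

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import median


@dataclass(frozen=True)
class QueryEvent:
    """One entry from a hypothetical query log."""
    user: str
    data_product: str
    duration_ms: float


def summarize_usage(events: list[QueryEvent]) -> dict[str, dict[str, float]]:
    """Aggregate query events per data product, similar to product usage analytics."""
    by_product: dict[str, list[QueryEvent]] = defaultdict(list)
    for event in events:
        by_product[event.data_product].append(event)

    summary = {}
    # Sort by product name so reports are stable from run to run.
    for product, product_events in sorted(by_product.items()):
        summary[product] = {
            "queries": len(product_events),
            "distinct_users": len({e.user for e in product_events}),
            "median_ms": median(e.duration_ms for e in product_events),
        }
    return summary


if __name__ == "__main__":
    log = [
        QueryEvent("ana", "sales_metrics", 120.0),
        QueryEvent("ben", "sales_metrics", 80.0),
        QueryEvent("ana", "sales_metrics", 100.0),
        QueryEvent("cho", "inventory", 300.0),
    ]
    for product, stats in summarize_usage(log).items():
        print(product, stats)
```

Counting distinct users alongside raw query volume helps separate a data product that many people rely on from one that a single automated job queries heavily.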

The key to DataOps and a great self-service data architecture is to think about data assets as products — just like you’d think about creating software products. By implementing these four pillars, organizations can deliver high-quality data products to data consumers more efficiently.

The Benefits of DataOps

For most organizations, it’s challenging to scale the process of turning raw data into valuable business insights. Business users often rely on data engineers to pull the data they need by hand, and these low-level tasks distract data teams from more impactful data initiatives.

DataOps leverages automation so that building and maintaining data pipelines is easier and less labor-intensive. Any repeatable task related to data engineering can be codified and managed within an automated CI/CD process. Agile principles like continuous iteration and feedback also help data teams and business users work together more effectively to adapt to the evolving requirements of data analytics.

By implementing an effective DataOps strategy, data teams can improve the way they develop and deliver data analytics to different teams throughout an organization. In turn, this enables enterprises to democratize their data to generate smarter business insights, faster than before.

Best Practice: Integrate a Semantic Layer with a DataOps Strategy

A semantic layer can support a DataOps strategy by creating a unified set of shared metrics, known as a semantic model, that data consumers can access through virtual data pipelines. Because this semantic model is defined in a programmable markup language and shares its metadata via APIs, it is compatible with CI/CD processes.
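To illustrate why a model defined as code fits into CI/CD, here is a minimal sketch of an automated check on shared metric definitions. The model below is written as JSON purely as a stand-in; it does not reproduce AtScale's actual modeling language or schema, and the field names, allowed aggregations, and `validate_model` function are all hypothetical.

```python
import json

# Hypothetical semantic model, written as JSON purely for illustration.
# This is NOT AtScale's modeling language; field names are assumptions.
MODEL_SOURCE = """
{
  "datasets": {
    "orders": ["order_id", "region", "amount_usd", "order_date"]
  },
  "metrics": [
    {"name": "total_revenue", "dataset": "orders", "column": "amount_usd",
     "aggregation": "sum", "description": "Sum of order amounts in USD"},
    {"name": "order_count", "dataset": "orders", "column": "order_id",
     "aggregation": "count_distinct", "description": "Number of distinct orders"}
  ]
}
"""

ALLOWED_AGGREGATIONS = {"sum", "count", "count_distinct", "avg", "min", "max"}


def validate_model(model: dict) -> list[str]:
    """Return validation errors; a CI job could block a merge if any are found."""
    errors = []
    datasets = model.get("datasets", {})
    seen_names = set()
    for metric in model.get("metrics", []):
        name = metric.get("name", "<unnamed>")
        # Shared metrics must have unique names so every team means the same thing.
        if name in seen_names:
            errors.append(f"{name}: duplicate metric name")
        seen_names.add(name)
        columns = datasets.get(metric.get("dataset"))
        if columns is None:
            errors.append(f"{name}: unknown dataset {metric.get('dataset')!r}")
        elif metric.get("column") not in columns:
            errors.append(f"{name}: unknown column {metric.get('column')!r}")
        if metric.get("aggregation") not in ALLOWED_AGGREGATIONS:
            errors.append(f"{name}: unsupported aggregation {metric.get('aggregation')!r}")
        if not metric.get("description"):
            errors.append(f"{name}: missing description")
    return errors


if __name__ == "__main__":
    model = json.loads(MODEL_SOURCE)
    errors = validate_model(model)
    if errors:
        raise SystemExit("\n".join(errors))
    print(f"{len(model['metrics'])} shared metrics validated")
```

Running a check like this on every proposed change means a broken or ambiguous metric definition is caught before it reaches data consumers, rather than after dashboards start disagreeing.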

In short, a DataOps strategy powered by AtScale’s semantic layer helps organizations accelerate query performance, increase access to data while maintaining analytics governance, and improve the consistency of data insights across teams.

Watch the full video module for this topic as part of our Data & Analytics Maturity Workshop Series.
